Focus On the User: Leveraging Usage Data in Search Relevancy Algorithms

Sections

- TL;DR

- Classic Information Retrieval

- Links = SEO

- Filtering Signals vs Throwing Away Data

- Augmenting Link Data With Click Data

- Activity Bias

- Domain Bias

- Navigational Searches

- Panda

- General Usage Data Usage

- Filtering Out Fake Users & Fake Usage Data

- Localizing Search Results

- Historical Data

- Engagement Bait

- Best Time Ever

- The Beatings Shall Go On...

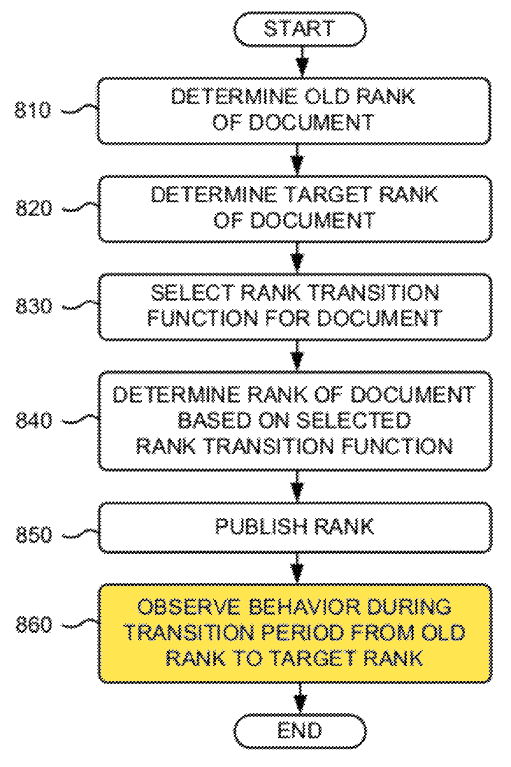

- Rank Modifying Spammers

- Gray Areas & Market Edges

- Facts & Figures

- No Site is an Island

- High Value Traffic vs Highly Engaged Traffic

- I Just Want to Make Money. Period. Exclamation Point!

- Panda & SEO Blogs

- Engagement as the New Link Building

- How Engagement is Not Like Links

- Change the Business Model

- Put Engagement Front and Center

- In Closing

TL;DR

The following article was intended to be something like 20 or 30 pages, but as I kept reading more patents I kept seeing how one would tie into the next and I sort of just kept on reading, marking them up, quoting them, and writing more. The big takeaway is that much of Google's modern algorithm is driven by engagement metrics. It might not have outright replaced links (& links can still be used for many functions like result canonicalization and generating the initial seed result set), but engagement metrics are fairly significant. To break it down into a few bullet points, Google can...

- compare branded/navigational search queries to the size of a site's link profile to boost rankings for sites which users seek out frequently & demote highly linked sites which few people actively look for

- compare branded/navigational search queries to the size of a site's overall search traffic footprint to boost rankings for sites which users seek out frequently & demote sites which few people actively look for

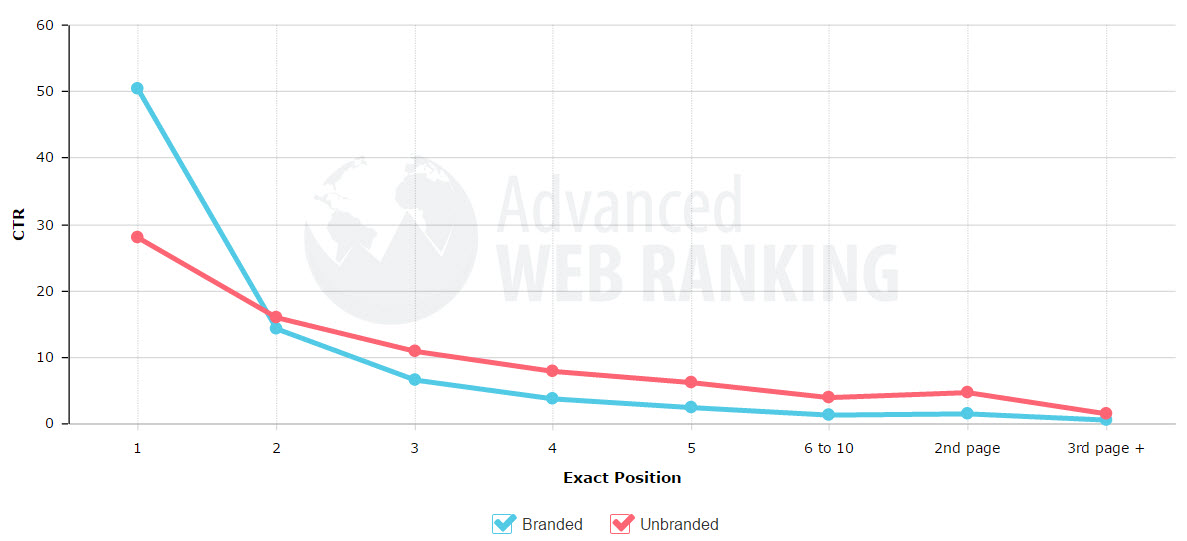

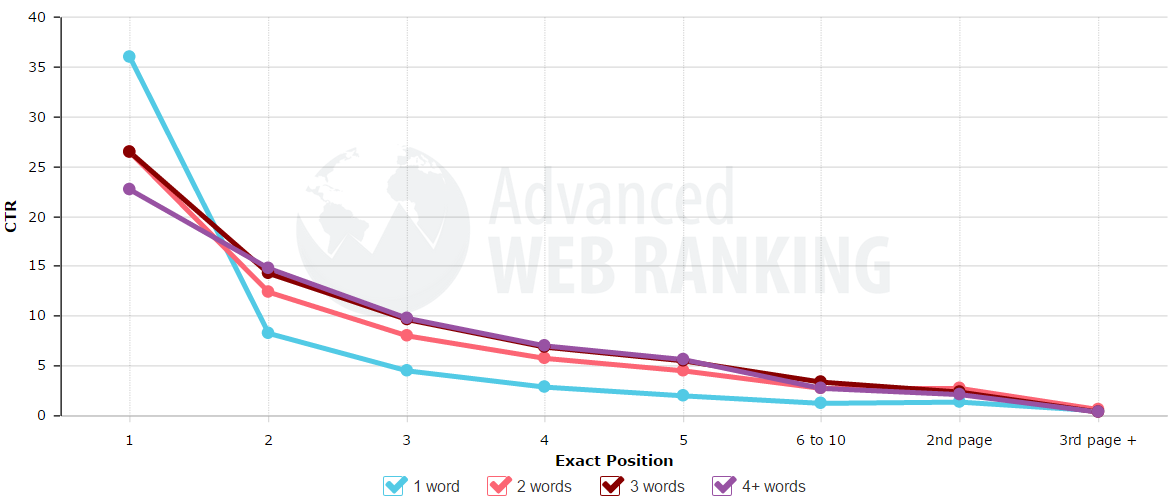

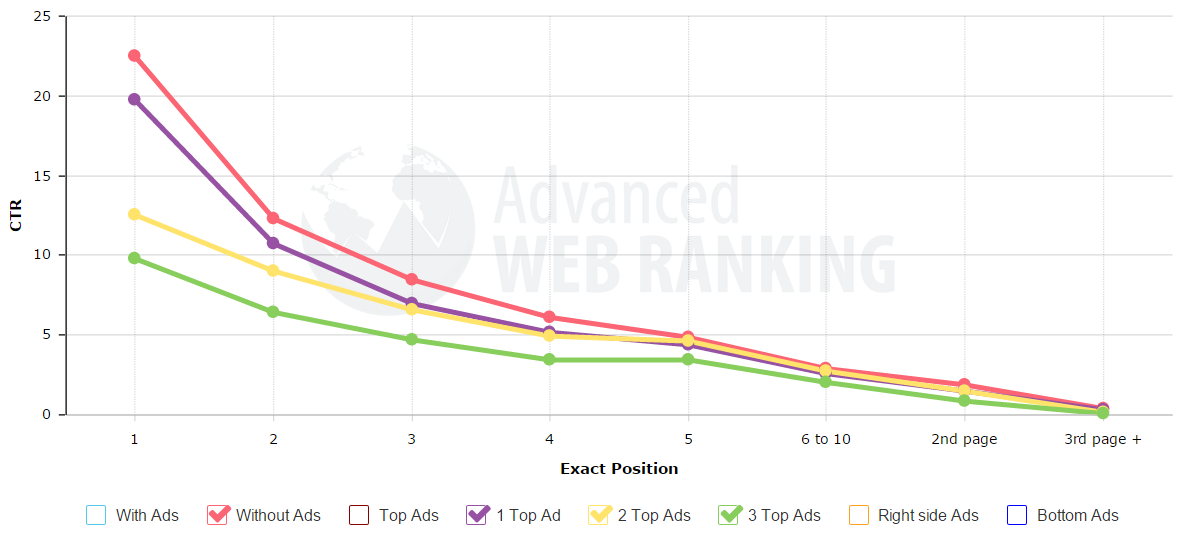

- normalize anticipated CTR on a per-keyword level & track how a site responds to auditioned ranking improvements, and if people still actively seek out & click on the result if it is pushed a bit further down the page

- boost rankings of sites which people actively click on at above normal rates

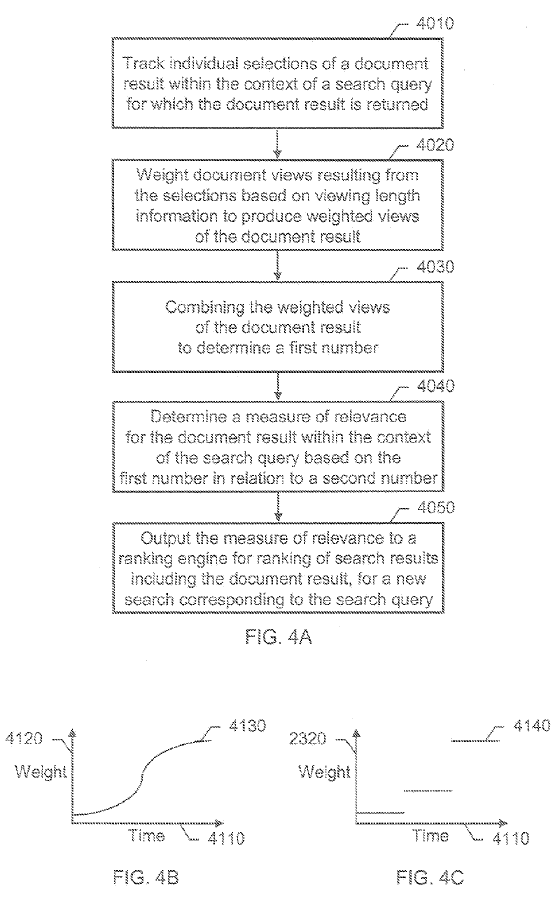

- further boost user selection data from within the SERPs by counting "long clicks" (click with a high "dwell time" - where a user clicks on them then doesn't return to the SERPs shortly after)

- demote sites & pages where people frequently click back to the SERPs shortly after clicking on them (by comparing the ratio of "long clicks" to "short clicks")

- use Chrome and Android usage data to track usage data for sites outside of the impact of SERP clicks

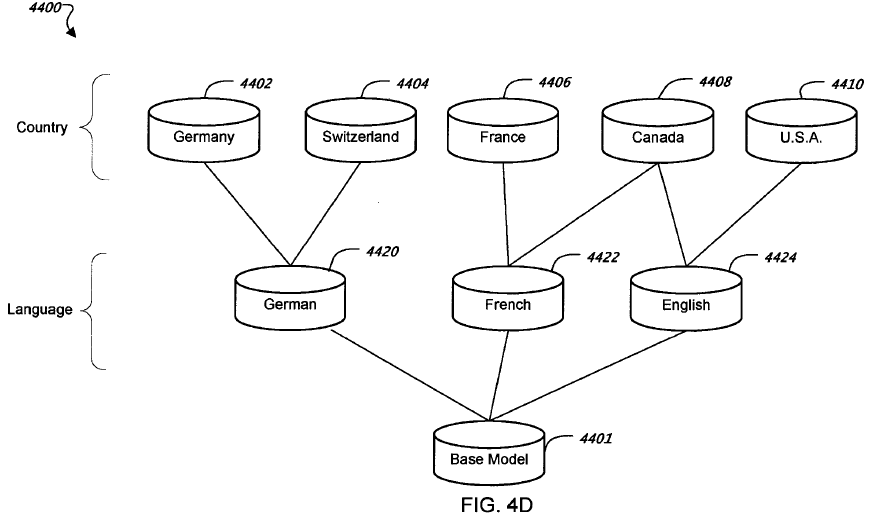

- use their regional TLDs and registered user location data to further granularize and localize their data

- have many traps built into their algorithms which harm people who focus heavily on links without putting much emphasis on engagement

The following is a review of many of Google's patents related to engagement metrics. Not all these patents may be used by Google in their ranking algorithms today, but Google tests making well over 10,000 relevancy changes a year & the 500 or 1,000 or so with desirable outcomes end up getting implemented.

Each year computing gets faster, more powerful, and cheaper. That in turn enables Google to do more advanced computations and fold more signals into their relevancy algorithms. One of Google's key innovations with their data centers is using modular low-cost hardware. But as computing keeps getting cheaper they could eventually implement in real time some algorithmic aspects they compute offline. Just like solid state drives are faster than traditional hard drives, next year Intel and Micron are expected to start selling 3D XPoint memory, which is up to 1,000 times faster than Nand flash storage in memory cards & SSDs.

Every day Google has more computing horsepower and more user data at their disposal. Things which are too computationally expensive today may eventually end up being cheap.

Classic Information Retrieval

Shortly after Amit Singhal joined Google, he rewrote some of Google's core relevancy algorithms. When he studied information retrieval he studied under Gerard Salton, whose A Theory of Indexing is a great introduction to on-page information retrieval relevancy factors. It highlights classical IR concepts like term frequency, inverse document frequency, discrimination value, etc.

The sort of IR described in that book (published in 1987) works great when there is not an adversarial role between the creator of the content within the search index and the creator of the search relevancy algorithms. However, with commercial search engines, there is an adversarial relationship. Many authors are unknown and/or are driven by competing business interests and commercial incentives. And the search engine itself has incentives to try to push as much traffic as possible through their ad system & depreciate the role of the organic search results. In some of Google's early research they concluded:

Currently, the predominant business model for commercial search engines is advertising. The goals of the advertising business model do not always correspond to providing quality search to users. For example, in our prototype search engine one of the top results for cellular phone is "The Effect of Cellular Phone Use Upon Driver Attention", a study which explains in great detail the distractions and risk associated with conversing on a cell phone while driving. This search result came up first because of its high importance as judged by the PageRank algorithm, an approximation of citation importance on the web [Page, 98]. It is clear that a search engine which was taking money for showing cellular phone ads would have difficulty justifying the page that our system returned to its paying advertisers. For this type of reason and historical experience with other media [Bagdikian 83], we expect that advertising funded search engines will be inherently biased towards the advertisers and away from the needs of the consumers.

As a sidebar, my old roommate in college ran research experiments where he mutated switchgrass using radio waves. He suggested if you could mutate switchgrass then the same types of radio waves could indeed cause cancer, but there is no source of funding for that type of research. And if there were, it would be heavily fought by Google given much of their growth in ad revenue has came from mobile ad clicks. And, as absurd as that sidebar is, it is also worth noting Google has fought against proposed distracted driving laws!

Google Inc has deployed lobbyists to persuade elected officials in Illinois, Delaware and Missouri that it is not necessary to restrict use of Google Glass behind the wheel, according to state lobbying disclosure records and interviews conducted by Reuters.

While Google hasn't become an across-the-board shill for all big businesses, they have certainly biased their "relevancy" algorithms toward things associated with brand.

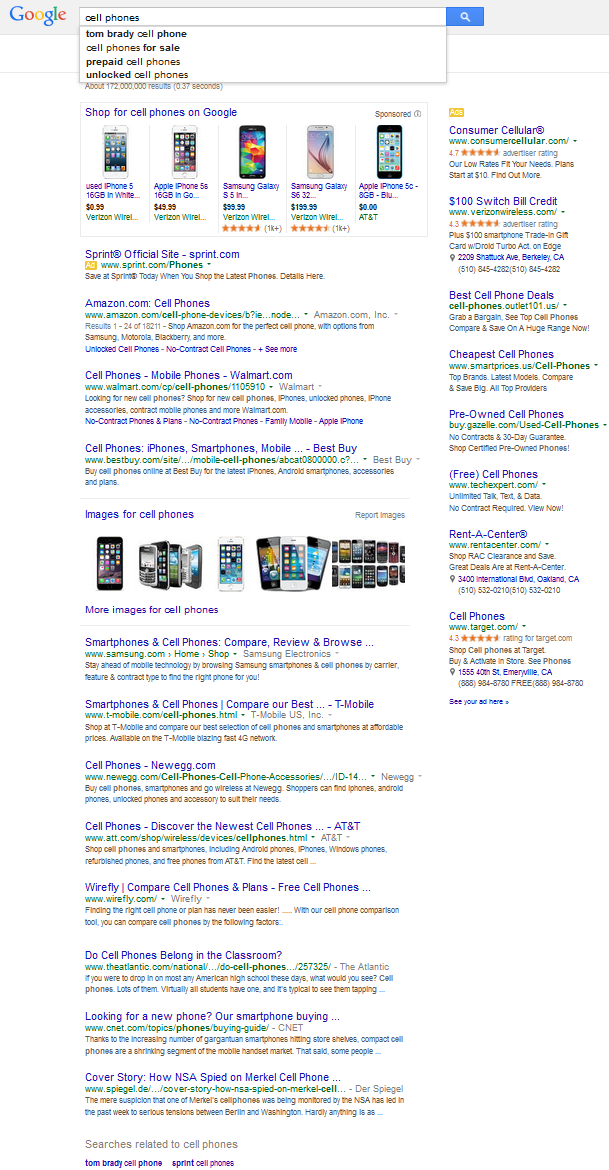

Here is a look at a current search results for [cell phones].

Note that almost every entity listing on that page is either a billion dollar company, is paying Google for an advertisement, or both. There are a few token "in-depth articles" at the bottom of the search result page, but few people will see them.

Links = SEO

PageRank

When Google was founded PageRank was a key differentiator between them and prior search services which weighed on-page factors more. I got into SEO back in 2003 & back then all you needed to rank for just about anything was a handful of keyword-rich inbound links. You could rank well in multi-billion Dollar industries within a month or two on a few thousand dollars of investment, so long as that investment went into links. TripAdvisor, which was set up as an example site to highlight the underlying features they desired to sell to other travel sites, quickly grew to a multi-billion dollar valuation on the back of paid links driven SEO success.

Back in August of 2006 I co-authored an article about link building listing many ideas (an article which is woefully outdated in the current marketplace, given Google has suggested one shouldn't even dare ask for links (though they later walked back that restraint of trade), Google now feels disavow justifies forcing people to spend on cleaning up unsolicited spam links from third parties, Google has stated they have a goal of breaking people's spirits & Google has an absurd 2-tier approach to selective enforcement where their investments are rarely penalized & if penalized it is only for a short duration).

The Death of Links = SEO

Early on in the article about link building, we quoted Brett Tabke's Robots.txt blog:

What happens to all those Wavers that think Getting Links = SEO when that majority of the Google algo is devalued in various ways? Wavers built their fortunes on "links=seo". When that goes away, the Wavers have zero to hold on to.

I think the last featured article I published in the member's area here was titled links and the glass ceiling. It tried to echo the problem with a links only view of SEO. That article wasn't published until February of 2013, so links = SEO still had a good 6 or 7 year run from when Brett Tabke warned of the mindset. ;)

Or, at the very earliest, the death of links = SEO would be February of 2011 when the Panda update rolled out. But that impacted some sites more than others & some sites managed to fly below radar for a year or two. Some smaller categories remain less impacted by Panda to this day.

In some verticals like local there might be other signals like local citations which augment links, but links are still powerful & the backbone of SEO. If they were not, we would no see so much propaganda about them. There would not have been the Penguin update, warnings against asking for links, nor all the manual link penalties. In markets with limited usage data for Google to rely on, links = SEO is still as true as it was back in 2006. Certainly Google has grown more selective and tightened down various filters, but tiny markets are tiny markets & thus have limited usage data to augment links.

Filtering Signals vs Throwing Away Data

Over the years the idea of using search CTR data to refine search relevancy scoring has been debated at great length. Typically when Matt Cutts has mentioned the topic he sort of dismisses the idea (or misdirects attention) by claiming "the data is noisy." But that Google knows the data is so noisy would indicate they've at least tested using the data. A data source being noisy doesn't mean the source must be discarded, but rather that one would need to massage away some of the noise in order to use it.

"It can be a great tool, but it's also easily manipulated. That being the case, if it were my engine, I would go out of my way to convince my enemy I would never user it. That way I could use it without the data being tainted." - Greg Boser

Google has done the same sorts of refinements with link data. They penalize overly aggressive anchor text, they try to count local links more, etc.

"in Austria, where they speak German, they were getting many more German results because the German Web is bigger, the German linkage is bigger. Or in the U.K., they were getting American results, or in India or New Zealand. So we built a team around it and we have made great strides in localization. And we have had a lot of success internationally." - Amit Singhal

That sort of filtering process on usage data has certainly been considered, given Matt Cutts has stated on algorithmic relevancy updates: "we got less spam and so it looks like people don't like the new algorithms as much."

Augmenting Link Data With Click Data

Estimating vs Direct Tracking

Google's early efforts to fold usage data into the link graph were more around re-modeling PageRank based on presumptions and likelihoods of user behavior with links rather than directly observing user behavior. For example, a 2004 patent by Jeff Dean named Ranking documents based on user behavior and/or feature data isn't really discussing using usage data from the search engine itself, but rather modeling which links are more likely or less likely to be clicked by a user reading a particular web page.

A larger font size and a link above the fold pointing into a document on a related topic in the main content area of the page is more likely to be clicked. A link in the footer to the terms of service, a link which is below the fold, a link near the bottom of a list of links, a link to an irrelevant page, or a link to a parked domain, etc. ... is less likely to get clicked.

So in spite of the above patent name, this patent was more about further adjusting the links on the link graph to estimate the probability of user behavior on that page (is a user likely to click on this link or not), rather than creating a complimentary or competing relevancy signal which could replace the importance of links.

By using signals other than links Google ensures that many players who buy a store of links (through recycling a well-linked expired domain or such) struggle to rank on a sustainable basis. If they go for big money keywords and do not have great engagement metrics then they still might get clipped by the usage data folding, even if they are missed by the remote quality raters, the search engineers, and the other algorithmic aspects.

Chrome & Android

It wasn't until Google had a broad install base for Chrome and Android that they started putting serious weight on how users interact with the results. In fact, for many searches for many years the links in the search results were coded as direct links rather than tracking URLs. The Chrome web browser launched in September of 2008 & by February of 2011 the Panda algorithm was live.

And Google isn't the only company tracking users across devices for ad targeting & attribution. Facebook has leveraged their user profiles as logins for other apps & the new Windows 10 launch is largely about cross-device user tracking for ad targeting.

Clickstream Data

One of the better publicly published blog posts in the last 5 years about SEO was the recent post by A.J. Kohn asking Is Click Through Rate a Ranking Signal? In the article he cites a couple patents and the following Tweet, which quotes Udi Manber on using click distributions to adjust rankings: "The ranking itself is affected by the click data. If we discover that, for a particular query, hypothetically, 80% of people click on Result No. 2 and only 10% click on Result No. 1, after a while we figure it out, well probably Result 2 is the one people want. So we'll switch it."

Google confirms watching clicks to evaluate results quality. FYI Google still won't say if clicks used as rank signal pic.twitter.com/jzNGc5reQk— Danny Sullivan (@dannysullivan) March 25, 2015

The Udi Manber quote came from the leaked FTC Google review document.

Bing has also admitted to using clickstream data. They not only used clickstream data from their own search engine, but Google conducted a sting operation on Bing to show Bing was leveraging clickstream data from Google search results. This is perhaps one of the reasons Google shifted to using HTTPS by default: to block competitors from being able to leverage their search data to modify user experience. While the FTC document highlighted how Google scraped Amazon sales rank data for products, an article in TheInformation later stated people like Urs Holzle "felt irked" when Google search query stream data leaked to competitors like Amazon.

Dozens of Patents, Tons of Data

If Google has a stray patent here or there about potentially using a signal, then perhaps they are not using it. But if Google has created about a dozen related patents & is blocking competitors from using the signals, there is a strong chance they are using the associated signals.

And while Google throws away the data of marketers to increase the cost and friction of marketing...

keywords don't matter, which is why Google and now Bing go to great lengths to hide them from SEOs. hint...hint.— john andrews (@searchsleuth998) June 30, 2015

... they are a pack rat with data themselves:

“It’s not about storage, it’s about what you’ll do with analytics,” said Tom Kershaw, director of product management for the Google Cloud Platform. “Never delete anything, always use data – it’s what Google does.” “Real-time intelligence is only as good as the data you can put against it,” he said. “Think how different Google would be if we couldn’t see all of the analytics around Mother’s Day for the last 15 years.”

If Google is valuing the older usage data, then that doesn't mean they're ignoring it.

Rather they are storing it and using it as a baseline to evaluate new data against. They can track historical data & use it to model the legitimacy of new content, new content sources, new usage data, new user accounts providing usage data, etc.

Activity Bias

What is Activity Bias?

There is a concept called activity bias, where by actively targeting people you end up targeting people who were more pre-disposed to taking a particular action. The net effect is correlated activities may be (mis)attributed as causal effects.

At the same time advertisers are trying to target relevant consumers to increase yield, ad networks also automatically use campaign feedback information to further drive targeting:

If only males are clicking on the ad that promote high-paying jobs, the algorithm will learn to only show those ads to males. Machine learning algorithms produce very opaque models that are very hard for humans to understand. It’s extremely difficult to determine exactly why something is being shown.

Yahoo! published a research paper on this concept named Here, There and Everywhere: Correlated Online Behaviors Can Lead to Overestimates of the Effects of Advertising. eBay later followed up with a study named Consumer Heterogeneity and Paid Search Effectiveness: A Large Scale Field Experiment.

Monetizing Activity Bias With Ad Retargeting

There is a popular concept in online marketing called remarketing/retargeting, where companies can, for example, target potential customers who put an item in an online shopping cart but didn't complete their order. Some of these ads might contain the product which was in the cart and a coupon as a call to action. For smaller websites without well known brands, using retargeting is a way to build awareness and help push people over the last little hurdle toward conversion. For larger sites like eBay many people who convert would have converted anyway (activity bias) so an optimal approach for them would be to lower ad bids on ads for people who frequently visit their site and/or have recently visited their site; while increasing bids for people who either haven't visited their site or haven't visited it in a long time.

Google was quick to counter eBay's research in a paper of their own named Impact of Ranking of Organic Search Results on the Incrementality of Search Ads, which claimed paid search exposure was highly incremental.

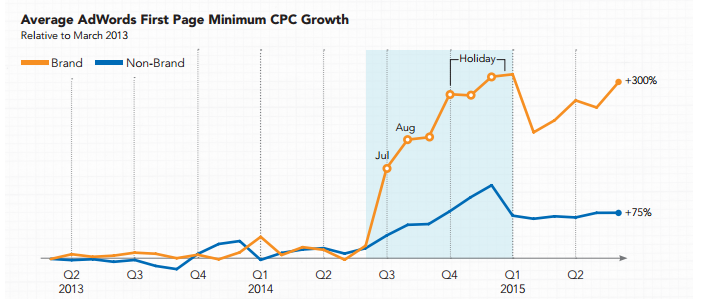

Arbitraging Brand Equity

There are some people in online marketing who paint all affiliates as scum, while viewing any/all search ad spend as worthwhile. Some affiliates add value while others arbitrage the pre-existing consumer path without adding any value. The same is sometimes true with some search ad set ups. The distinctions between traffic channels get more blurry when one is participating in a rigged auction and bidding against themselves & the auction house keeps rolling out a variety of directly competing vertical services which they grant preferential placement to. It is also worth mentioning that Google Analytic's default set up with last click attribution favors the search channel & other late-stage demand fulfillment channels over early-stage demand creation channels.

Some paid search advertising set ups have advertisers arbitraging their own brand without attempting to justify the incremental impact of the clicks. If the click prices are cheap enough (and they are often priced low enough to be a no brainer) then perhaps blocking out competition and controlling the messaging has enough value to justify the cost, but there are cases where Google has drastically increased advertiser bids when they added sitelinks, or Google has tried to push the branded search traffic through Google Shopping to charge higher rates while arbitraging the brand traffic.

Smart paid search management segments branded versus unbranded search queries, such that they can be tracked and managed separately. The reason sloppy campaigns blend things together is because some service providers charge a percent of spend, and if they can used perceived "profits" from arbitraging the customer's brand to justify bidding higher on other keywords then the higher aggregate spend means higher management fees for less work.

Cumulative Advantage

In line with the concept of activity bias, there is another concept called cumulative advantage. People often tend to like things more if they know those things are liked by others. Back in 2007 the New York Times published an article on this featuring Justin Timberlake.

The common-sense view, however, makes a big assumption: that when people make decisions about what they like, they do so independently of one another. But people almost never make decisions independently — in part because the world abounds with so many choices that we have little hope of ever finding what we want on our own; in part because we are never really sure what we want anyway; and in part because what we often want is not so much to experience the “best” of everything as it is to experience the same things as other people and thereby also experience the benefits of sharing.

When Wikipedia editors try to slag the notability of someone, a popular angle is a quote like this one: "The subject's 'notability' appears to be a Kardashianesque self-creation."

What is left unsaid in the above quote is that Wikipedia publishes a 5,000+ word profile of Kim Kardashian.

Once you are established, brand awareness can help carry you. But when you are new you have to do whatever you can for exposure. For Kim, that meant a "leaked" sex tape with a rapper. For other people it might mean begging or groveling for exposure, putting some of your best work on someone else's site to get your name out there, pushing to create some arbitrary controversies, going to absurd length in covering a topic, spending tons of time formatting artistic work, or buying ads which barely break even.

In isolation, the story John Andrews highlighted in the following Tweet sounds like a quite limited success story.

“@ellisshuman: How I Sold 910 Copies of My Book in One Week

http://t.co/EHKn6yZMz0 NOTE: He made less than $200 profit.— john andrews (@searchsleuth998) July 6, 2015

However, if you consider activity bias, any exposure the author gets by the ad network for the testimonial, any exposure the author gets from other authors who want to live the dream, etc. ... then maybe the ROI on the above isn't so bad.

Once an artist or author is well known, then they have sustained instant demand for anything they do. Once they are mired in obscurity there is no demand for their work no matter how good it is.

Many marketplaces which sell below cost to get the word out end up going under. Most start ups fail. But if your costs are low & you are just starting out & are not well known, then anything which approaches break even while getting your name out there might be a win.

Domain Bias

Brands vs Generics

Bringing things back to the search market, domain names & branding are highly important. One of my old business partners is an absolutely brilliant marketer who worked for some of the big ad agencies and one of the points he made to me long ago was that when markets are new and barriers to entry are low one can easily win by being generically descriptive. Simply being relevant is enough, because relevancy creates affinity when there are few options to select from. Call this the "good enough" scenario. But as competition in markets heats up one needs to build brand equity to differentiate their offering and have sustainable profit margins.

There are a couple dimensions worth mentioning in how this applies to search.

- If a market is "new" there might not be enough scale to justify aggressive brand related marketing, thus one can succeed by entering the market early with a generic name & regular content creation. It is much harder to enter

- In some smaller local markets where there is less usage data (say Pizza shops in Ottawa, IL; or a niche in geographic markets where advertising is less prevalent than in the US) one can still win with a generic name. You can rank locally for Pizza, but it is hard to beat a big brand pizza chain like Dominos in ranking broadly across the country.

- When the search relevancy algorithms & Google's data hoard & computational power were more limited, more weight was placed on domain names which matched keywords. But as Google added other relevancy signals & verticalized search, those additional signals and channels lowered the value of generic domain names.

Brand & Profit Margins

To appreciate how hard it is to have sustainable profit margins without a strong brand, consider how some of the Chinese manufacturers improved their profit margins during the 2015 Chinese stock bubble:

“According to the latest official data, profits earned by Chinese manufacturers rose 2.6% from a year earlier in April, a turnaround from a drop of 0.4% in the previous month. Yet nearly all of that increase—97%—came from securities investment income, data from the National Bureau of Statistics show. Excluding the investment income, China’s industrial profits were up 0.09%. ... "Manufacturing is a very hard business these days,” said Mr. Dong, chairman of the company. “I want to make some money from the stock market and use the profits to restart my manufacturing business later, when the economy turns for the better.”

Manufacturers which couldn't turn a profit instead used their cashflow to gamble in the stock market. Those gains then get reported as earnings growth for the underlying companies. And some of the companies were pledging their own stock as collateral to gamble on the stock market.

An Introduction to Domain Bias

Microsoft put out a research paper titled Domain Bias in Web Search. In that paper they noted users had a propensity to select results from domain names of brands they were already aware of. The bias they are speaking of is more toward sites like Yahoo.com or WebMD.com than a generic name like OnlineAuctions.com or WebPortal.com.

Viewing content on the Internet as products, domains have emerged as brands.

Their study found that the domain name itself could flip a user's preference for what they viewed as more relevant about 25% of the time.

This feedback from users not only drives higher traffic (and thus revenues) to the associated branded sites, but it also creates relevancy signals which fold back into the rankings for subsequent searchers

the click logs have been proposed as a substitute for human judgements. Clicks are a relatively free, implicit source of user feedback. Finding the right way to exploit clicks is crucial to designing an improved search engine.

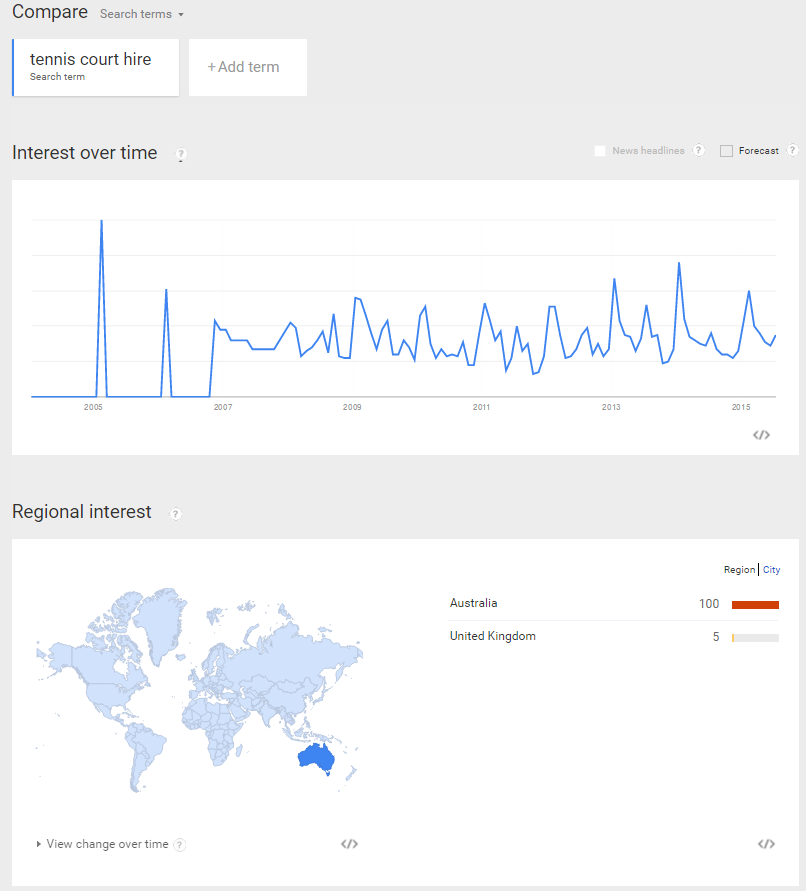

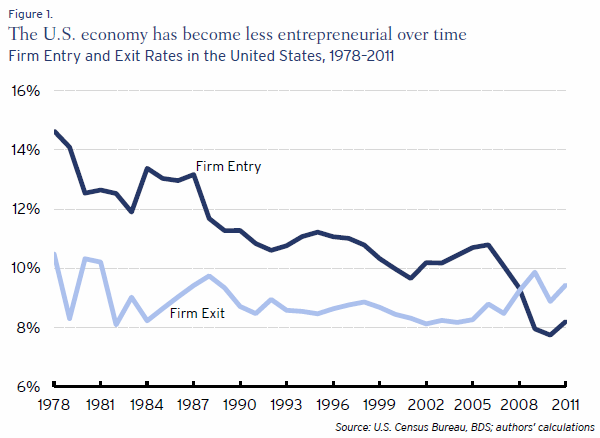

The study compared data from 2009 & 2010 and found that over time the percent of queries which were navigational was increasing. And even outside of the navigational queries, traffic was consolidating onto a smaller set of known domains over time due to user preference for known branded sites. Over time, as people build habits (like buying certain product categories from Amazon.com) the established user habits shift search query types.

Domain bias also affects how queries are categorized as navigational vs. informational. As user visits concentrate on fewer domains, former informational queries may now appear navigational. ... One common approach for determining whether a query is navigational is based on the entropy of its clicks. As domain bias becomes stronger, user visits are concentrated on fewer domains, even for informational queries. Algorithms for distinguishing between informational and navigational queries may have to be revisited in the presence of domain bias.

Once our habits are in place, even if the rankings shift a bit, it is hard to change our habits. So if the Amazon.com page ranks a couple spots lower but is still our go-to spot for a particular item, we will still likely click on that listing & give Bing or Google the signal that we like that particular result.

Branded Searches Replace Direct Navigation

The above noted increasing proportion of navigational searches was a trend noticed back in 2010. That was before the rise of mobile search, before Chrome & other web browsers replaced address bars with multi-function search boxes, etc. A few years back in the forums DennisG mentioned how a greater share of what were formerly direct visits to eBay became branded search queries. Search effectively replaced direct navigation (or typing in domain names) for many consumers.

And the consolidation of search volumes on large marketplaces has only increased over the years due to a broad array of factors. Smaller ecommerce businesses competed primarily via enjoying artificially high exposure in the search channel, but that has changed.

- user awareness of and preference for larger sites

- less weight on legacy search relevancy signals like link anchor text

- increasing penalties for smaller sites: manual penalties, Panda, Penguin, etc.

- greater weight on usage data & engagement-related relevancy signals

- larger search ad units further displacing the organic result set

- higher search ad prices killing the margins on smaller businesses with more limited inventory & lower lifetime customer values

- the insertion of vertical search ads in key areas like ecommerce

Traffic Consolidation

One of my old small ecommerce clients at one point outranked the brand he sold on their own branded keywords. Those were the days! :)

But in less than 5 years he went from being one of the largest sellers of that company (& over 10% of their sales volume) to having his sales shrink so much that he took his site offline. After there were 3 AdWords ads above the organic results with sitelinks, Google launched product listing ads & the branded site got 6 sitelinks, the remaining organic results were essentially pushed below the fold.

The above story has played out over and over again. You can read the individual stories or see it in the aggregate metrics: "Apart from Amazon—which has long spurned profits in favour of growth—most pure-play online retailers are losing market share, says Sucharita Mulpuru of Forrester Research."

To isolate the impact of navigational searches on the study, they only analyzed keywords where pages were seen as roughly similar relevancy. They had people rate the snippets without the domain names & then with the domain names, and the domain name itself had a significant impact. Anything which is a known quantity which we have experience with is typically seen as a lower risk option than an unknown. And search engines think the same way "A related line of research is on the bias of search engines on page popularity. Cho and Roy observed that search engines penalized newly created pages by giving higher rankings to the current popular pages." Of course, search engines try to correct for some such biases using things like a spike in search volume or a large diverse collection of new news stories to trigger query deserves freshness to rank some fresh pages from news sites.

For a search engine to rank a new page on an old trusted site there isn't a lot of risk. For a search engine to rank a new site they are taking a big risk in ranking a fairly unknown quantity: "While there is an increasing volume of content on the web and an increasing number of sites, search engine results tend to concentrate on increasingly fewer domains!"

The Risk of Vertical Aggregators (to Google)

Whereas they are taking minimal risk in ranking a page on Amazon.com or WebMD. The result on a large trusted site is likely to be good enough to satisfy a user's interest and is a risk-free option for search engines. In fact, the only risk to search engines in over-promoting a few known vertical sites is that they might reinforce the user preference to such a degree that they increase the power of the vertical search providers.

The leaked FTC memorandum on Google quoted some internal Google communications:

"Some vertical aggregators are building brands and garnering an increasing % of traffic directly (vs. through Google); ... Strong content is improving aggregator organic ranking.~ and generating higher quality scores, giving them more free and/or low CPC traffic; . . A growing% of finance & travel category queries are navigational vs. generic (e.g., southwest. com vs. cheap airfare). This demonstrates the power of these brands and risk to our monetizable traffic." "Vertical Aggregators taking higher share of last clicks before sale," and "merchants increasing % of spend on aggregators vs. Google"

The reaction to the above fear is incidentally why Google got hit by antitrust regulators in Europe. Some notes from that leaked FTC memorandum:

- "The bizrate/nextag/epinions pages are decently good results. They are usually well-format[t]ed, rarely broken, load quickly and usually on-topic. Raters tend to like them" ... thus they decided to ... "Google repeatedly changed the instructions for raters until raters assessed Google's services favorably"

- “most of us on geo [Google Local] think we won't win unless we can inject a lot more of local directly into google results” ... thus they decided to ... "“add a 'concurring sites' signal to bias ourselves toward triggering [display of a Google local service] when a local-oriented aggregator site (i.e. Citysearch) shows up in the web results”"

The above displacement of general web search with vertical search is one of the reasons I though brand-related signals might peak. That thesis was not correct. What what was incomplete in that analysis was "the visual layout of the search result page trumps the underlying ranking algorithms," something I was well aware of back in 2009, though I failed to realize Google was eventually going to force the brands to pay premium rates for their own branded keywords by inserting other ad types driven by alternative pricing metrics. Earlier attempts to get brands to pay for banners on their own branded terms failed to monetize as well as Google hoped.

Helpful, but Still Spam

Some of Google's remote rater guidelines have explicitly mentioned a variety of ways to disintermediate other aggregators. Perhaps the most egregious example was when Google suggested HELPFUL hotel affiliate sites shall be rated as spam, but they have also had other more subtle instructions. For example, they have mentioned when search results ask for a list of options that the rater could consider the search result page itself as that list of options. And then of course there are all of Google's vertical search offerings, YouTube, the Play store, the knowledge graph, and answers scraped from pages formatted as featured results.

Google's decisions to launch new features & displace the result set are not algorithmically driven, but are executive business decisions. Many of the ranking signals are designed primarily around anti-competitive business interests.

And while the big decisions are made by the executives, Google falls back on "the algorithm" anytime there are complaints: “The amoral status of an algorithm does not negate its effects on society.”

The above referenced Microsoft research paper on domain bias concludes with

Domain bias has led users to concentrate their web visits on fewer and fewer domains. It is debatable whether this trend is conductive to the health and growth of the web in the long run. Will users be better off if only a handful of reputable domains remain? We leave it as a tantalizing question for the reader.

Navigational Searches

The Promotion of Brands Expressed in User Habits

The above mentioned Microsoft research paper mentions "the existence of domain bias has numerous consequences including, for example, the importance of discounting click activity from reputable domains."

Google itself has to a large degree moved in the opposite direction. There are some search queries where an unbranded site with decent usage metrics but little brand awareness might require 500 or 1,000 unique linking domains to be able to rank somewhere on the first page of the search results. In some cases on that same SERP, a thin lead generation styled page with obtrusive pop ups & no inbound links on a large brand site will outrank the smaller independent site.

Without folding in engagement metrics, there is almost no way the ugly pop up ridden page on a big brand site would outrank that independent site with a far better on-page user experience.

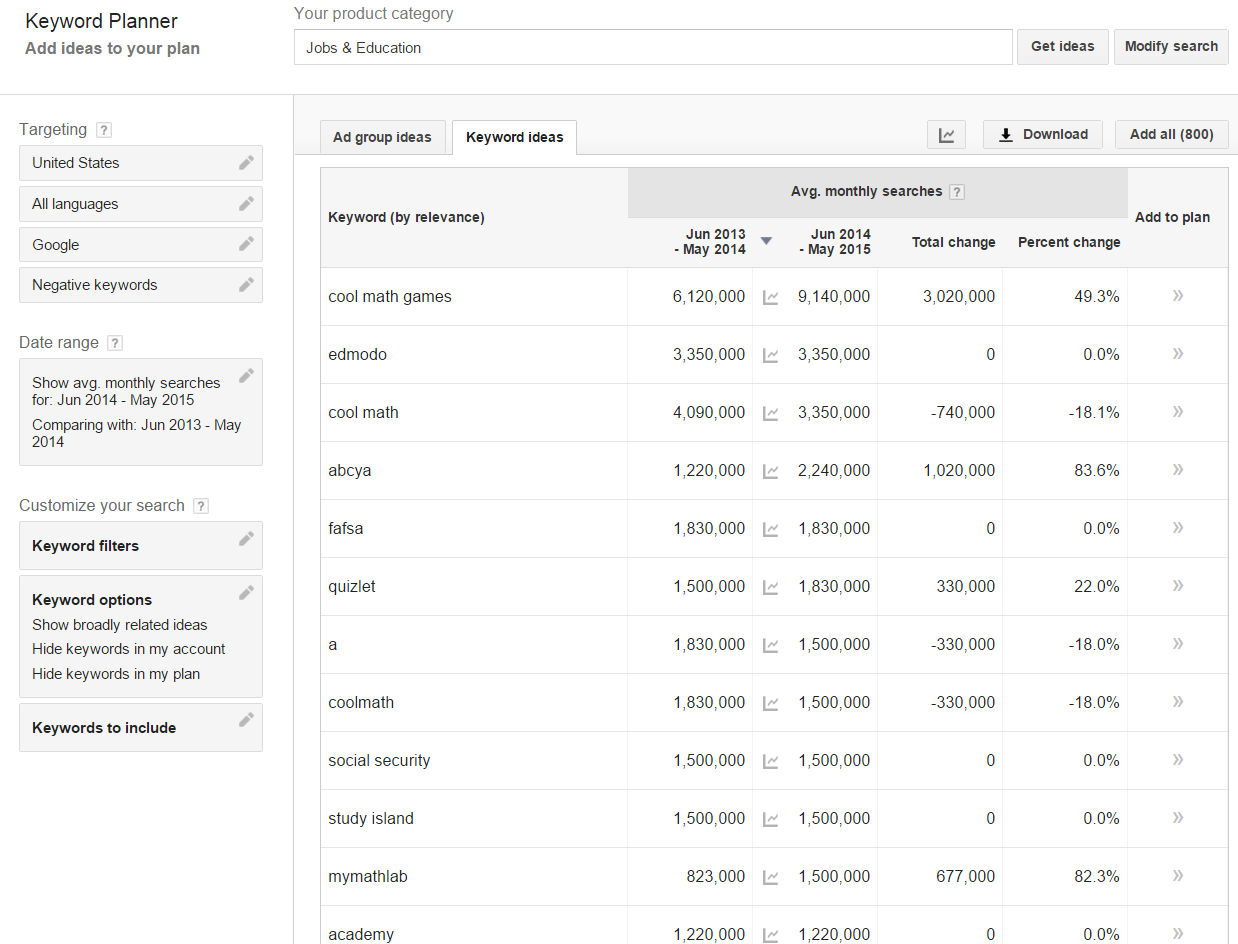

While it may be cheap to try to fake some brand-related inputs at a smaller scale, it would be quite expensive to do it at a larger scale while making the profiles look natural, as many of the most popular keywords are navigational branded terms. The Google AdWords keyword tool shows popular keywords by category & with the the jobs and education category there are millions of monthly searches for branded terms like edmodo and cool math games.

How prevalent are navigational queries or reference queries associated with known entities? Is there enough search volume there to create relevancy signals? According to Microsoft Research:

at least 20-30% of queries submitted to Bing search are simply name entities, and it is reported 71% of queries contain name entities.

Shortening Query Chains

Google uses navigational search queries as a relevancy signal in a number of ways. When the Vince update happened Google explicitly referenced it being associated with query chains. Part of the hint that query chains were at play was some of the larger social sites like Facebook were showing up as related queries where they wouldn't be expected to. As the social network was a common destination when people were done with other tasks, Google incorrectly folded a relationship in with Facebook and other search queries.

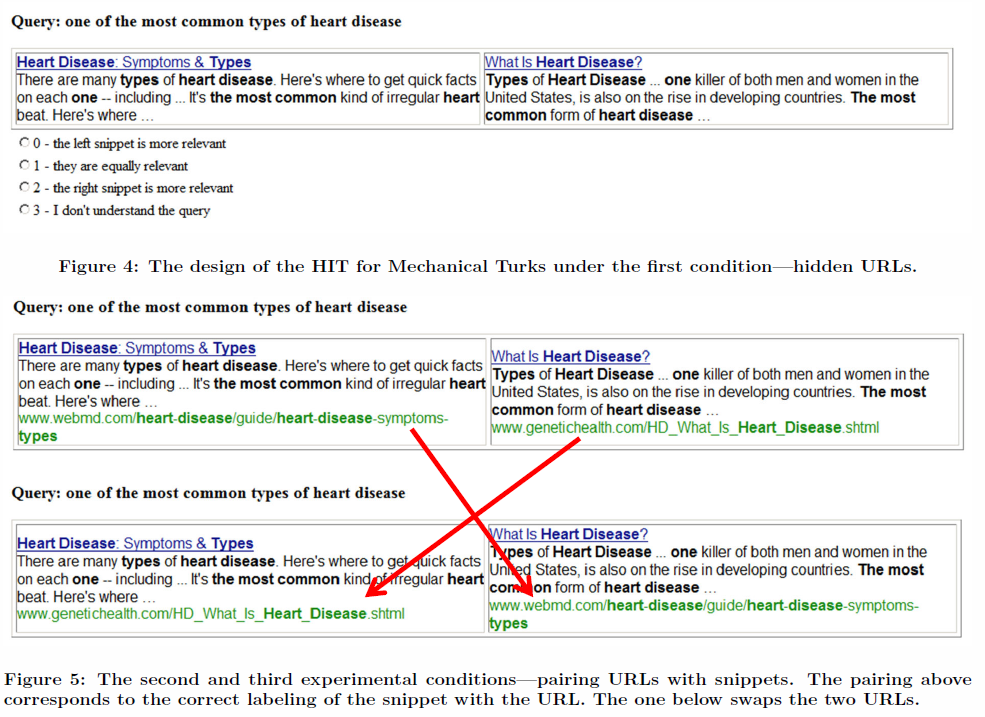

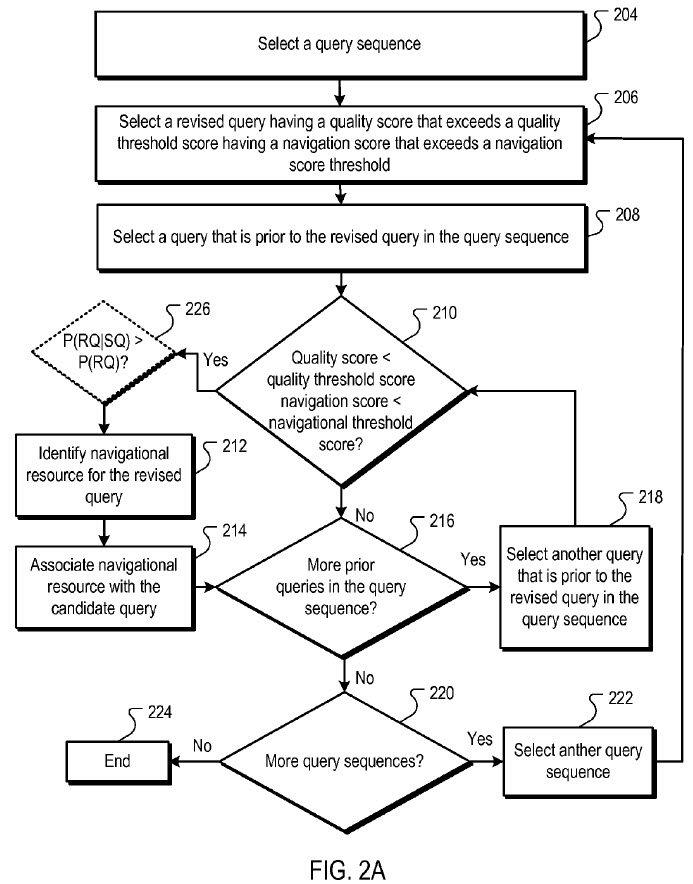

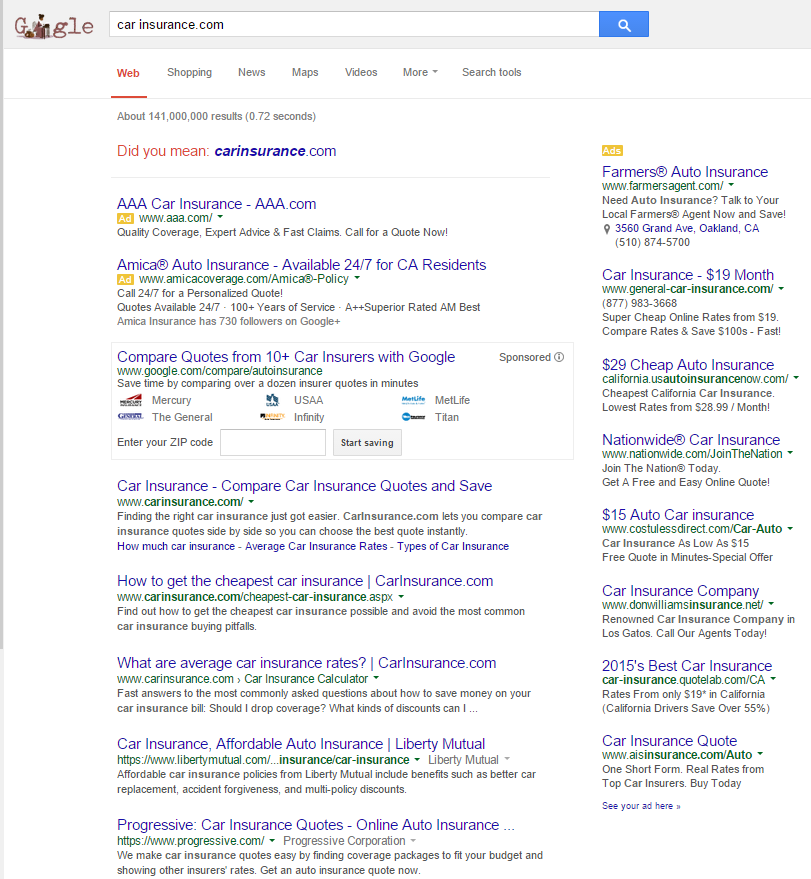

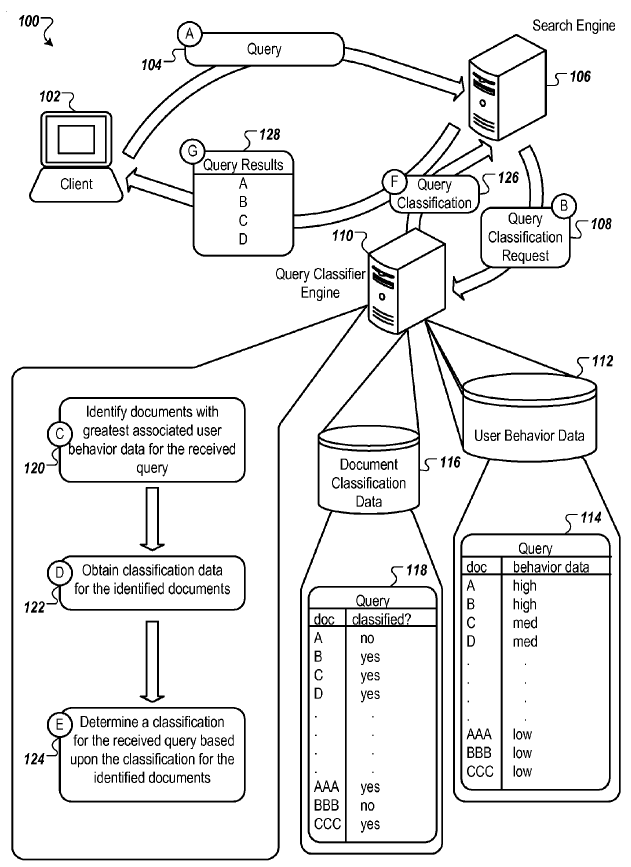

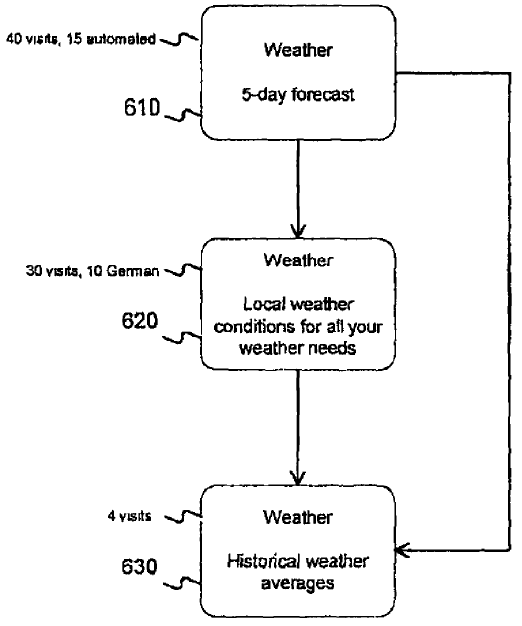

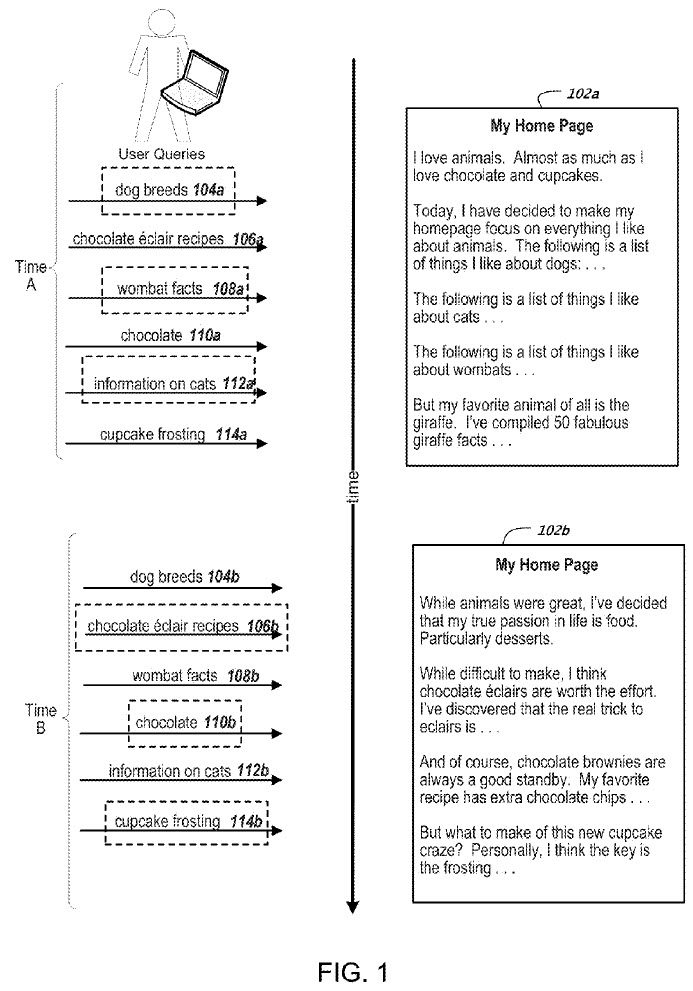

In 2009 Google applied for a patent named Navigational Resources for Queries. Here is an image from the patent.

And the abstract states:

Methods, systems and apparatus, including computer program products, for identifying navigational resources for queries. In an aspect, a candidate query in a query sequence is selected, and a revised query subsequent to the candidate query in the query sequence is selected. If a quality score for the revised query is greater than a navigational score threshold, then a navigational resource for the revised query is identified and associated with the candidate query. The association specifies the navigational resource as being relevant to the candidate query in a search operation.

In the past Microsoft offered a search funnel tool which showed the terms people would search for after searching for an initial query.

For searches where Google doesn't have much data they have less confidence in the result quality, so they try to shift people toward more common related queries. They do this in multiple ways including automated spell suggestions & using their dictionary behind the scenes to associate like terms with like meanings. This also has the benefit of increasing the yield of search ads as it makes it easier to return relevant ads & auction participants are pushed to compete on a tighter range of search queries.

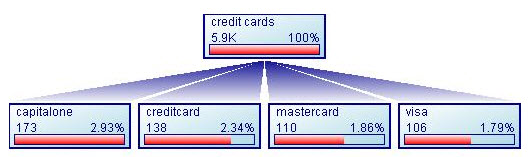

While the above image only shows a single directional chain, some query refinement chains might have 3 or 4 or 5 steps in them, with searchers starting at a generic informational search and ending up at a navigational search query. Conceptually it might be something like:

- how to get a credit card

- best credit cards

- credit cards available to students with no credit

- top student credit cards

- Visa student card

Google can determine the associations of the queries based on sharing common terms or word stems or synonyms, the edit distance between the terms, the time between each query modification within a search session (less time between queries is generally seen as a greater signal of association), and if people typing a subsequent query are more likely to type that particular query after typing one of the related earlier queries.

Many people searching for [credit card] related terms eventually type a search query which contains Visa and/or MasterCard in it. Once Google determines Visa and MasterCard are relevant to topics like [top student credit cards] they can also re-run the same process and determine if they are also relevant to other queries earlier in the chain.

Other navigational search queries may be far more popular than Visa and MasterCard, but not as popular in the specific process or sequence. For example, if 3% of total global search volume is for a navigational term like Facebook, then Facebook.com will have a high navigational score and their links will also give them a high authority score. But if after completing the above mentioned credit card task, only 0.7% of people want to check out Facebook real quick, then Google should not try to associate Facebook into the above stream, because that 0.7% is less than the 3% of the total search market which the search query [Facebook] represents.

Facebook is a navigational query and Facebook.com is the navigational resource associated with that navigational query, but Facebook is not a navigational query relevant to credit card searches.

Google's work on understanding entities and word relations further augments their efforts to shorten query chains by allowing pages on authoritative sites to rank for many conceptually related longtail queries even if some of the words are not included within the page or in inbound link text pointing at the page. The downside of these algorithms is not just that they scrub away ecosystem diversity, but they also make it hard to find some background information about broad entities (say Google, Facebook, Amazon, etc.) because marginally related topical pages on the official entity site itself will outrank many third party sources writing about specific aspects of the entity or platform.

How does Google know if a query is navigational?

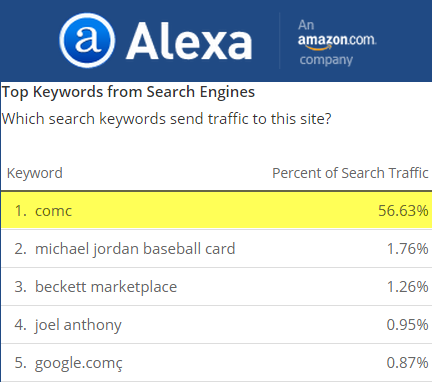

There are a variety of signals Google can use, including things like: domain name matching a keyword, anchor text distributions, quality & quantity of links to a site from unaffiliated domains, other ranked sites for a query linking to a specific site (inter-connectivity of the initial search result set / the topic referenced in Hilltop), percent of searches which conclude a search session, the click distribution among searchers for a query and the percent of search traffic into a site which contain a specific term. Navigational or branded searches tend to have many clicks on the top ranked results, whereas informational searches tend to have a more diffuse click profile deeper into the result set.

The last signal is probably the most powerful one for bigger brands. If millions of people are searching for something, almost all of them are selecting a specific site, and many of them are repeatedly selecting the same site - they are sending a clear message, saying "this is what I want."

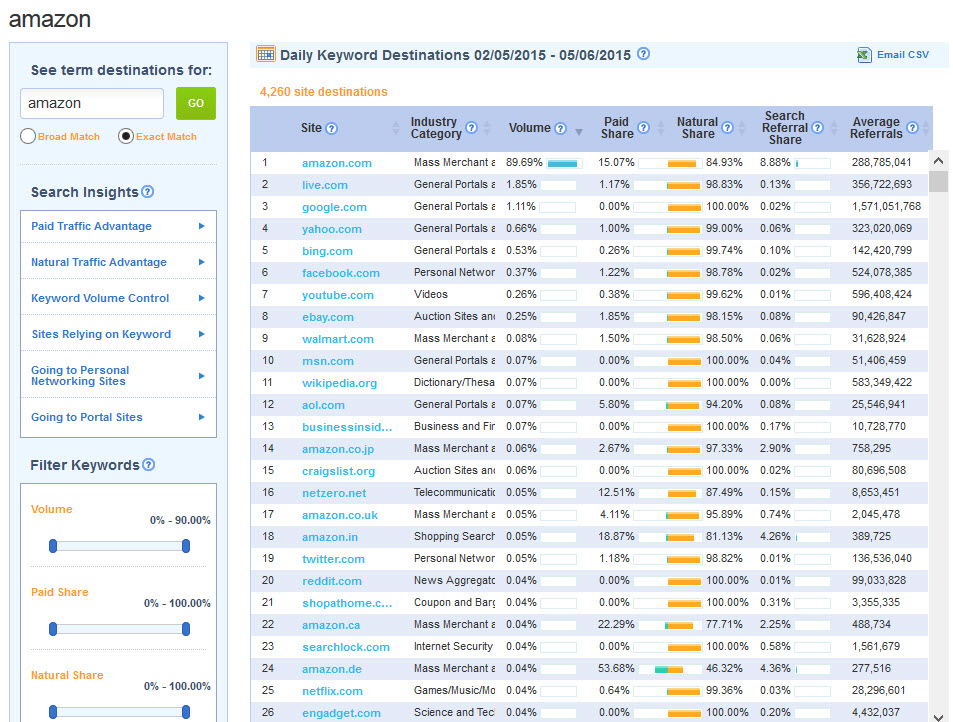

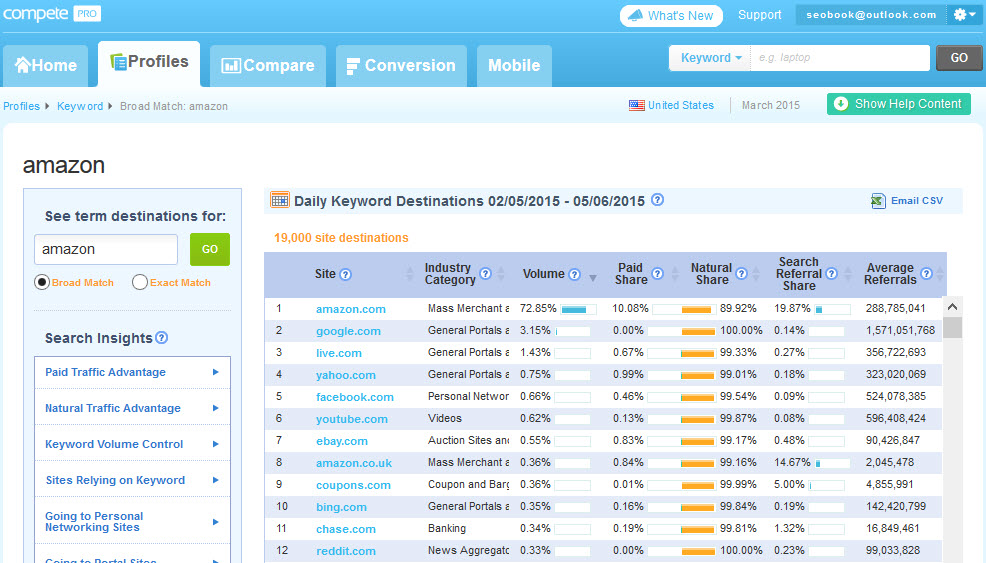

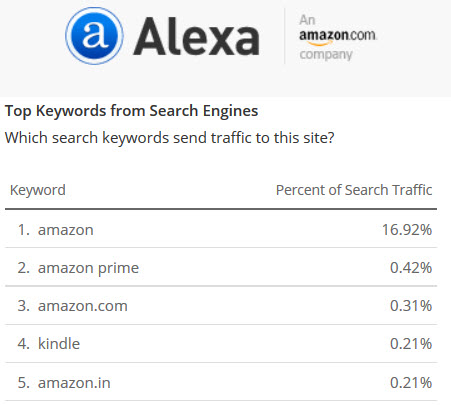

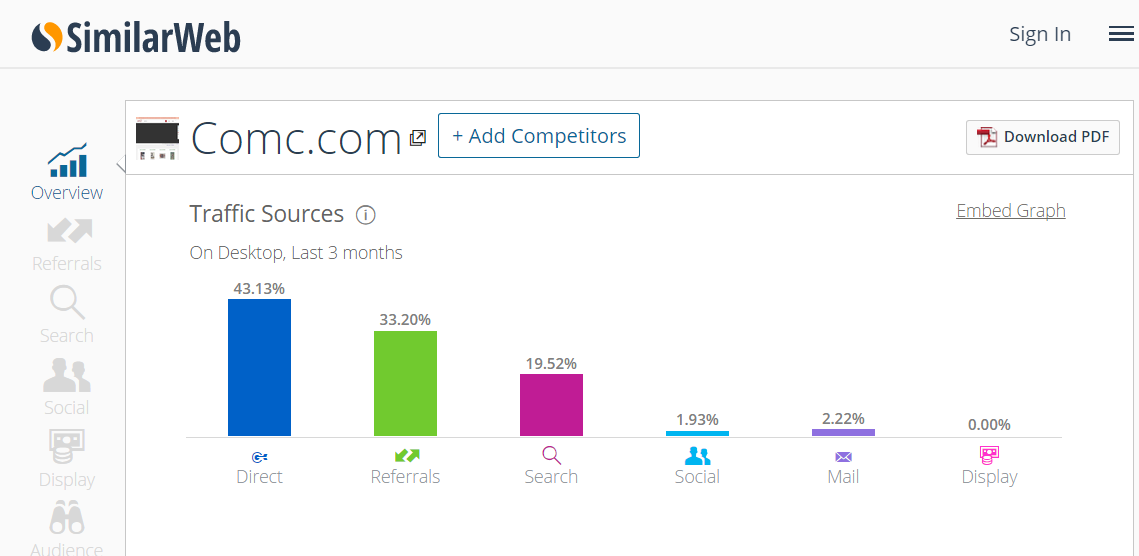

According to Compete.com, about 90% of the people in the US who type [amazon] into a search box end up clicking on Amazon.com. And that number likely skew significantly low of the true CTR.

- some searches are siphoned off by search boxes in the address bar & thus don't get reported as going through the search engines

- some of the other sites which show as the "next" click are broad web portals, which could mean things like people clicked the link to Amazon to open in a new window and then later did another search, or they clicked through some links which had some tracking redirects on them, or people clicked back into the SERP after they did a quick visit on Amazon (perhaps they did a quick visit to see the price of an item they were about to buy in a physical store & wanted to see if the cost savings of buying online were sufficient to justify waiting to get the item)

From the above Compete.com screenshot you can see almost 10% of the people in the US who visit Amazon.com from search do so by specifically searching for [amazon]. When the term is broad matched, that 10% jumps to about 20%. So not only are over 10 million people searching for Amazon each month, but then another 10+ million are searching for a variety of other queries like [Amazon.com Tide laundry detergent pods].

Generic vs Brand

The issue with brand-based signals in competitive markets where brand signals play a large role, is ot hard to be both a generic industry term and a brand name unless your product creates a new category: like Post-it, Kleenex & Zerox.

| Industry Generic | Brand |

| Auction.com, Auctions.com, OnlineAuction.com | eBay |

| Search.com SearchEngine.com | Google, Bing |

| Portal.com WebPortal.com | Yahoo!, MSN, AOL |

| Store.com OnlineStore.com | Amazon.com |

| Insure.com Insurance.com AutoInsurance.com CarInsurance.com | Geico, Progressive, AllState, Esurance |

The branded terms which are not generic can afford to advertise in many different mediums from humorous TV commercials or YouTube videos (like Geico does), banners across the web, social media ads, paid search ads, retargeting/remarketing ads, billboards, radio ads, etc. Any form of exposure which drives brand awareness and search volume then creates an associated relevancy signal.

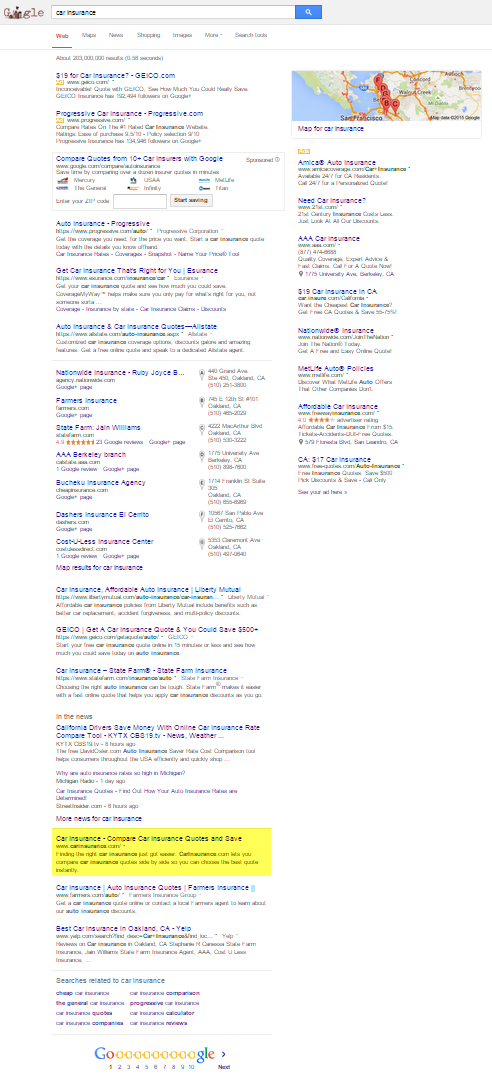

But if you own a site like CarInsurance.com, even if you get a lot of people to search for the term you are targeting [car insurance] that is unlikely to count as a brand-related signal for your site, because Google can run many ads on that search and the site CarInsurance.com would only get a tiny minority of the search click volume, while the majority of it is monetized by Google.

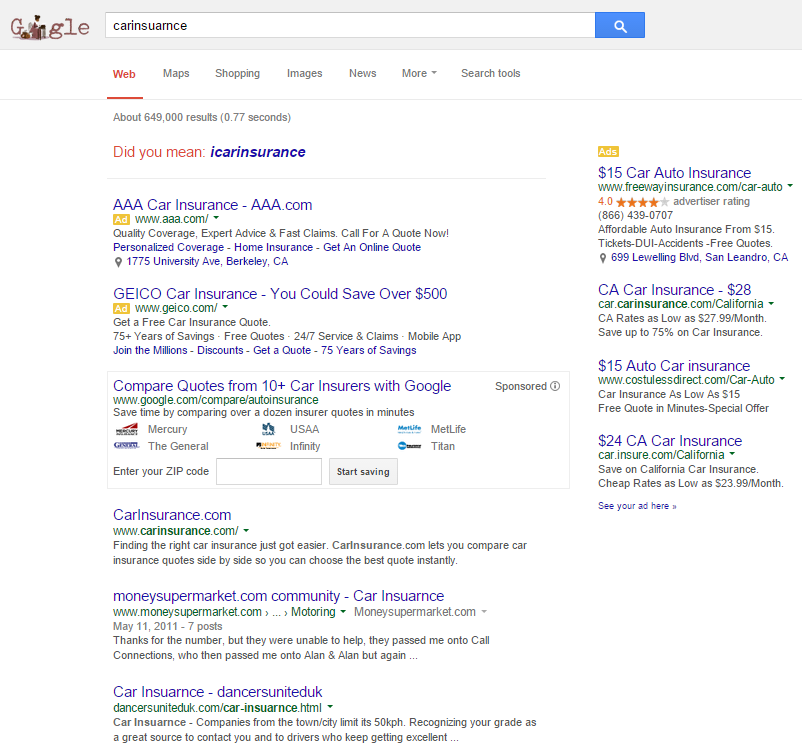

Look how small I had to make the font to get CarInsurance.com to appear above the fold. That Google puts the result so far down the SERP is one indication they don't view the term [car insurance] as a branded term.

In most cases (with a few rare exceptions) Google won't consider that type of search term as having navigational intent UNLESS you run the words together...

...or add the domain extension to the search...

...or do both.

But in the current search ecosystem, few people who are searching for the generic term will run the words together or add the TLD to the search query. Fewer still will do both. And, even when they do, Google still aggressively places ads before the algorithmically determined navigational result.

You can see from the above search results how Google goes from viewing the query as industry generic in the first search, on through to considering potential navigational intent on the middle queries, on through to being certain of a navigational intent on the last query (as indicated by the sitelinks). But even when there is navigational intent, the term is so close to other industry terms that Google puts their AdWords ads and vertical search results above the organic search results. That means that even if you own that sort of domain name and try to do any sort of brand advertising, if you get people to search for you, you need them to search for the niche navigational variation with the words ran together & the TLD added over the core industry term, and even then you still have to pray they don't click on the ads above the organic search results.

In the modern search ecosystem there is almost no way for a site like CarInsurance.com to create the brand-related signals to outrank Geico on the core industry terms without accruing a penalty in the process (unless Google invests in them and doesn't enforce the guidelines on them). And even if they somehow manage to outrank them without accruing a penalty, they are still below a bunch of ads, and a negative SEO push could easily drive them into a penalty from there.

Before the Panda update the SEO process (even for exceptionally compet might look something like:

- general market research

- keyword research

- pick a domain name tied to a great keyword

- create keyword-focused content

- build exposure & awareness through organic search rankings

- use the profit generated to build more featured content and awareness

...but now with the brand / engagement metrics folding, the SEO process might look more like:

- general market research

- determine a market or marketing gap based on the strengths and weaknesses of competitors

- buy a brandable domain name which leans into the perception and messaging in terms of the point of differentiation

- create featured editorial built around pulling in attention and awareness

- use that brand awareness and loyalty to build keyword focused content targeting commercial terms

I think the person who summed up the shift best was Sugarrae when she wrote: "Google doesn’t want to make websites popular, they want to rank popular websites. If you don’t understand the difference, you’re in for one hell of an uphill climb."

And with Panda updates shifting to becoming slow rolling roll outs, it will become much harder to know what is wrong if one is riding the line.

Google as Aggregator, Disintermediating Niche Businesses & Review Sites

As mentioned in an earlier section, Google's remote rater guidelines mentioned when search results ask for a list of options that the rater could consider the search result page itself as that list of options. Google's goal is to shorten query chains as much as possible. This effectively squeezes out many of the smaller players while subsidizing some of the larger known brands. If they could have gotten away with stealing reviews in perpetuity, they would have quickly displaced Yelp and TripAdvisor too. Anything where there is online activity associated with scheduling offline economic behavior is something Google wants to own and displace others from. They'll start with some of the most profitable markets (hotels, flights, auto insurance, financial products, etc.) and then work their way down.

This squeeze is challenging for niche retail sites or other sites with a significant trust barrier but limited friction in their process which allows bigger competitors to compete at lower margins. Even if you have better product organization & presentation, many people who find what they want on your site are likely to buy it on Amazon because they already have an account there & it is hard to beat Amazon on pricing.

As Google promotes known brands across a broader array of search queries, they can use the end user clickstream data to determine if they are leaning on the signal too heavily, and adjust downward the rankings of brands where the association is an algorithmic mistake (as few searchers click on it) or associations which do not fit a user's needs for other reasons (for example, if Google ranks Tesco.com high in the United States for [baby milk] when the cost of shipping internationally would be prohibitive for most consumers, any consumer who clicks on that listing is likely to click back and then select a different listing).

Bing is also testing featuring brands in search results on generic search queries. Some of the tests they have done have included the brands in the right rail knowledge graph rather than in the regular organic search results.

Where We Are Headed

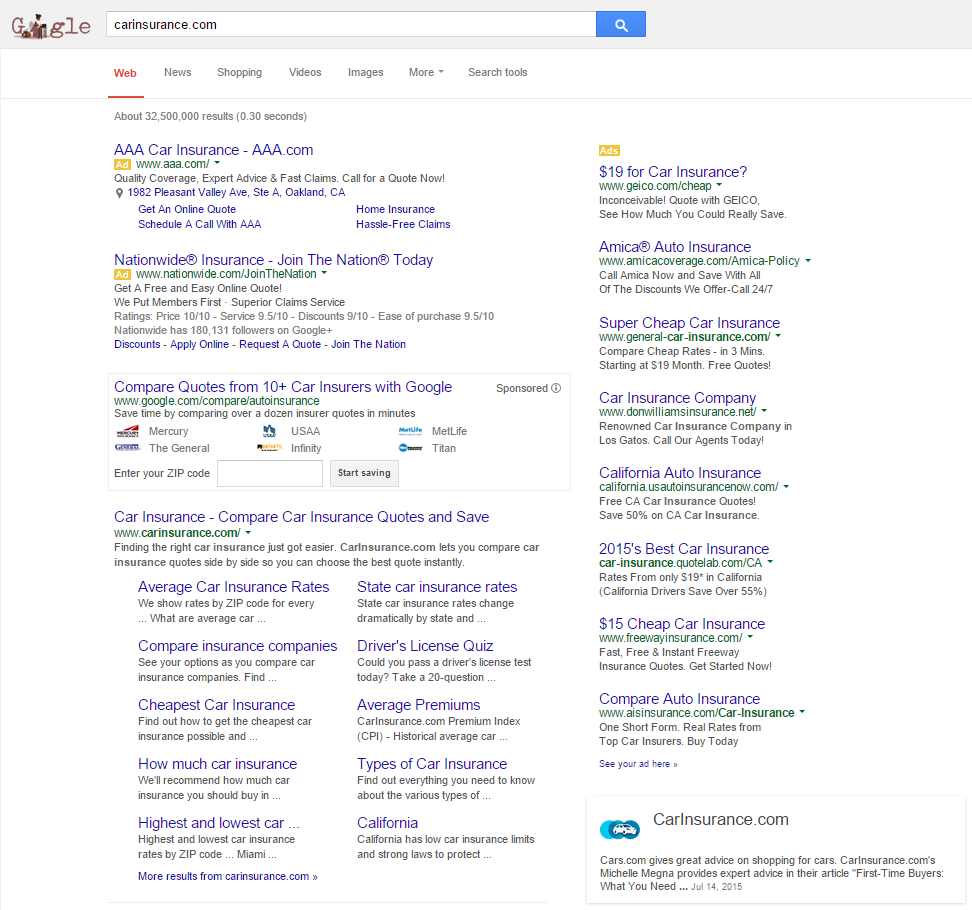

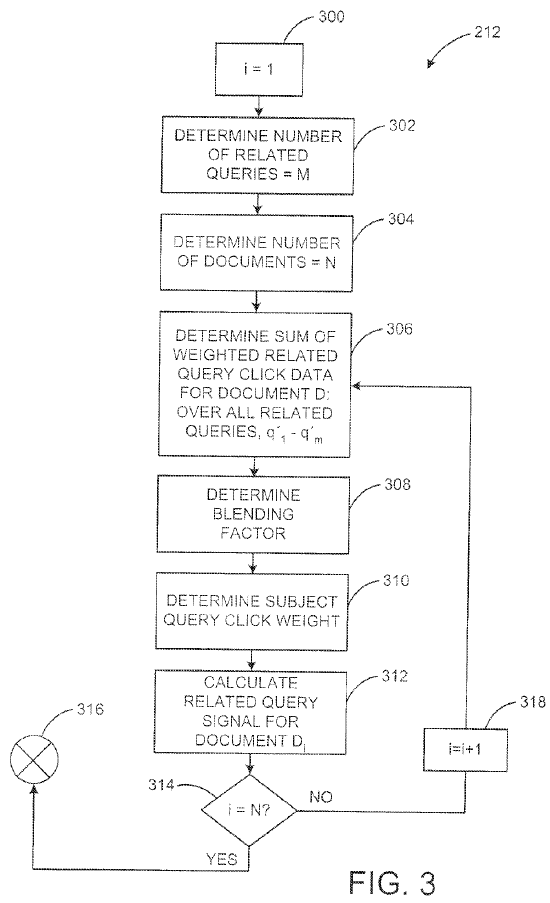

One of the above referenced patents mentioned how Google could fold navigational resources earlier into the search funnel, however Google can also use similar parallel signals for non-navigational search queries. The patent Methods and systems for improving a search ranking using related queries has an abstract which reads:

Systems and methods that improve search rankings for a search query by using data associated with queries related to the search query are described. In one aspect, a search query is received, a related query related to the search query is determined, an article (such as a web page) associated with the search query is determined, and a ranking score for the article based at least in part on data associated with the related query is determined. Several algorithms and types of data associated with related queries useful in carrying out such systems and methods are described.

Google has a variety of ways to determine if keywords are related.

- queries entered back to back

- queries entered with a short period of time between them

- queries which contain a common term

- edit distance between terms

- keyword co-occurrence in web documents

- n-gram data from their book scanning project

- clustered news articles & topics

- etc.

Google can estimate the similarity between keywords and then fold clicks data from documents ranking for parallel terms as a relevancy signal back into altering the rankings on the current keyword.

And, in addition to folding the weighted click data from related keywords back into the rankings of pages for the current term, Google can also use related terms to rewrite user search queries into more popular search queries where Google has a higher confidence in the result quality by substituting related terms. There are dozens of different patents like this, this or that on modifying search queries to better match user intent.

Google can also use the click stream data & topic modeling of selected pages to assign the dominant user intent for a keyword with multiple potential intents. Here is an image from their patent on propagating query classifications.

Each additional layer of data transformation surfaces more layers of confirmation bias, while the unknown has more new ways to become less known!

Niche sites will continue dying off:

there’s no reason why the internet couldn’t keep on its present course for years to come. Under those circumstances, it would shed most of the features that make it popular with today’s avant-garde, and become one more centralized, regulated, vacuous mass medium, packed to the bursting point with corporate advertising and lowest-common-denominator content, with dissenting voices and alternative culture shut out or shoved into corners where nobody ever looks. That’s the normal trajectory of an information technology in today’s industrial civilization, after all; it’s what happened with radio and television in their day, as the gaudy and grandiose claims of the early years gave way to the crass commercial realities of the mature forms of each medium.

As users spend more times on social sites & other closed vertical portals which leverage their walled gardens to have superior ad targeting data, media creators will follow audiences, and TV may well become the model for the web as small businesses are defunded & audiences move on.

Eventually they might even symbolically close their websites, finishing the job they started when they all stopped paying attention to what their front pages looked like. Then, they will do a whole lot of what they already do, according to the demands of their new venues. They will report news and tell stories and post garbage and make mistakes. They will be given new metrics that are both more shallow and more urgent than ever before; they will adapt to them, all the while avoiding, as is tradition, honest discussions about the relationship between success and quality and self-respect.

...

If in five years I’m just watching NFL-endorsed ESPN clips through a syndication deal with a messaging app, and Vice is just an age-skewed Viacom with better audience data, and I’m looking up the same trivia on Genius instead of Wikipedia, and “publications” are just content agencies that solve temporary optimization issues for much larger platforms, what will have been point of the last twenty years of creating things for the web?

Panda - Comparing Navigational Searches to a Site's Link Profile

Common Ways to Generate Awareness Signals

Google has advertised that buying display ads drives branded search queries.

If display advertising provides that type of lift, then advertising directly in the search results for relevant terms builds consumer awareness & leads to subsequent branded search volume.

As search engines have replaced address bars with search boxes, search has replaced direct navigation for an increasing share of the population. Any large organization will generate some amount of branded search volume by virtue of its size. Some examples below:

- employees may need to log in regularly to see important company news, changes to work policies, updates to their work schedules, check company email, etc. (this also applies to other organizations like non-profits, colleges, and military organizations)

- affiliates, suppliers & other business partners may need to log into sections of a site to find fresh promotions or product inventory needs

- publicly traded companies have employees with stock options, investors, stock analysts, etc. who regularly read their financial news and tune into their quarterly reports

- a person who regularly logs into their bank account or pays their utility bills online will likely perform many branded search queries (the risk of getting hacked by typing a URL incorrectly makes people more likely to visit some types of sites directly through the search channel)

- offline stores are like interactive billboards where you can buy products, or return/exchange broken products

- newspapers can invite readers to access extended versions of stories online & offer other benefits online

- coupon circulars, sweepstakes contests, and warranty polices can drive consumers to websites

- email lists, having a following on social channels, etc. can drive subsequent search volume (especially given how people consume media across multiple devices like their cell phone or their work computer & may prefer to transact on desktop computers while at home)

- etc.

Almost any form of awareness bleeds over to better aggregate engagement metrics.

Links Without Awareness = Trouble

The day Panda rolled out, my theory was "perhaps Google is looking at somehow folding brand search traffic into a signal directly in the web's link graph?"

A site which is held up by "just links" but has no employees, few customers, no offline stores, no following on social, no email list, almost nobody looking specifically for it, etc. may have a hard time creating enough usage signals to justify the existence of their link profile.

Bill Slawski was one of the first SEOs to cover the panda patent, named Ranking Search Results.

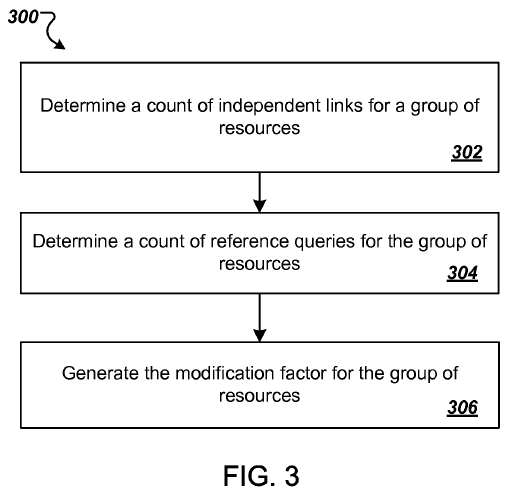

One of the methods includes determining, for each of a plurality of groups of resources, a respective count of independent incoming links to resources in the group; determining, for each of the plurality of groups of resources, a respective count of reference queries; determining, for each of the plurality of groups of resources, a respective group-specific modification factor, wherein the group-specific modification factor for each group is based on the count of independent links and the count of reference queries for the group; and associating, with each of the plurality of groups of resources, the respective group-specific modification factor for the group, wherein the respective group-specific modification for the group modifies the initial scores generated for resources in the group in response to received search queries.

Prior to the Panda update, Google would primarily count positive metrics, and typically ignore most other metrics. Panda was really the first widespread algorithmic situation where some typical relevancy signals which counted for you could start counting against you. Key to Panda was the combination of folding in usage data and analyzing the ratio of various signals. Suddenly, relying too heavily on a particular signal (like links) while failing to build broad consumer awareness could then make the links count against you rather than counting for you.

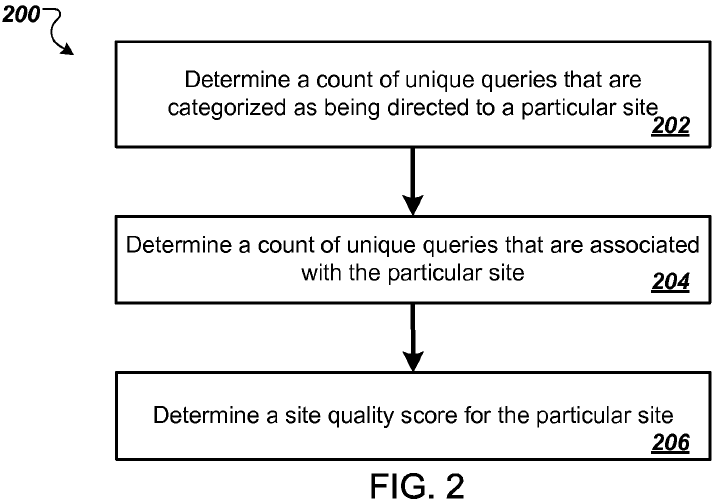

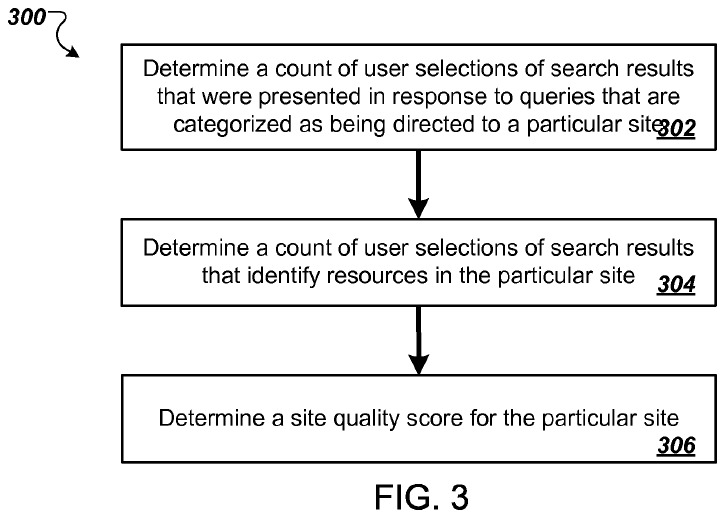

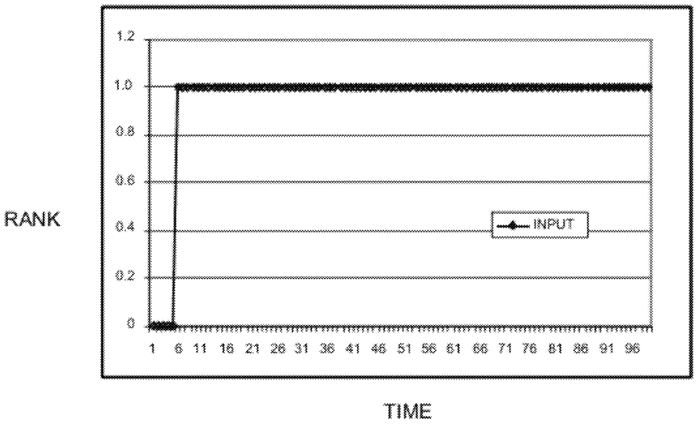

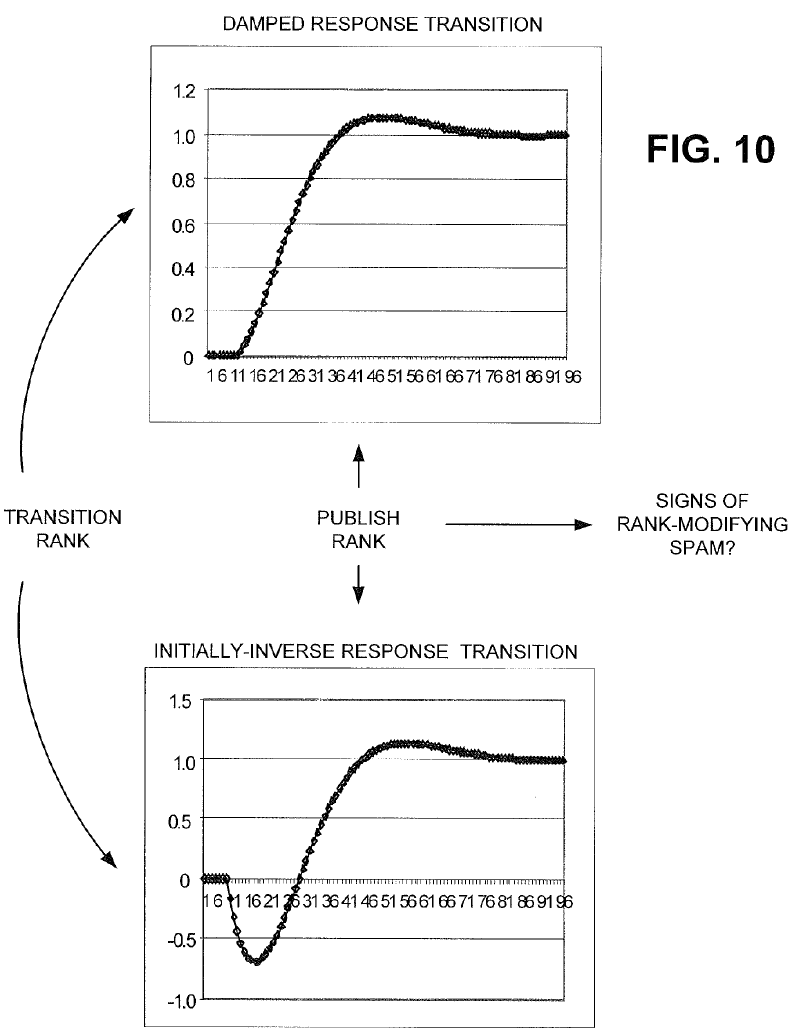

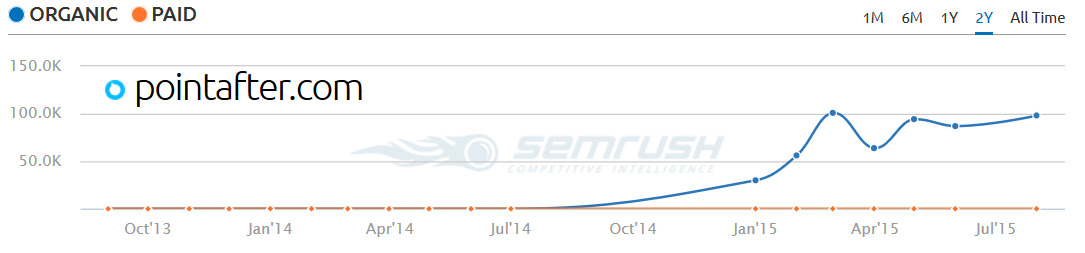

Here are a couple images from the Panda patent. The first shows how they compare unique linking domains from unaffiliated sites versus branded or navigational reference search queries which imply the user is looking for a specific site.

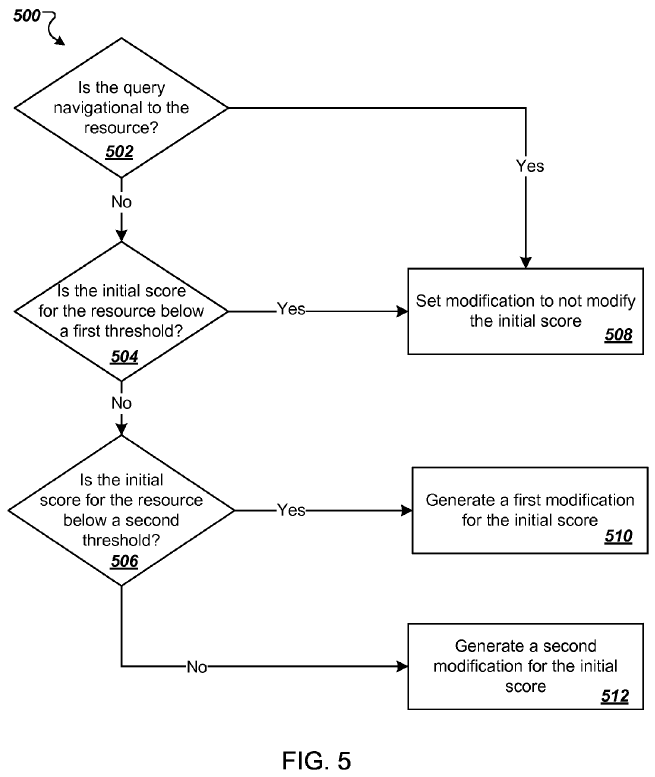

The second image shows that if the query is navigational they do not enable Panda to lower the ranking of the top ranked site, that way even a site hit by Panda is still allowed to rank for its brand-related search terms (provided those brand-related terms are not also industry generic terms & that there isn't a larger + more popular parallel parallel brand using the same name). This allows Google to aggressively penalize sites without making people realize they are missing, because they still show up when people specifically search for them.

Panda can impact sites positively, negatively, or have a roughly neutral impact.

- If lots of people are searching for your site (relative to the link count) then you can get a strong rank boost. A site like Amazon, which has many people looking for it, gets a ranking boost.

- If you have a decent number of navigational searches & a decent number of links your site's ranking scores might not change (though other sites around your site may still rise or fall due to positive or negative Panda-scores). Many sites in this category still saw significant ranking shifts due to sites like Amazon.com, eBay.com & WalMart.com rising.

- If your site has few people looking for it (relative to the size of the link profile) then your site's ranking scores are negatively impacted by Panda. A site like Mahalo, which was a glorified scraper site propped up by link schemes & didn't publish particularly useful content, saw its rankings tank.

Google can also normalize the modification factor so they apply them differently to sites of different sizes and levels levels of awareness.

Panda Evolves

Some smaller sites which were not hit by the first version of Panda were not hit because Google first wanted to go after the most egregious examples & then as Google gained more confidence in their modeling they were able to apply the algorithm with increased granularity.

In one speech Matt Cutts mentioned that Panda sort of worked like a thermostat, so while the above 3 buckets exist, there can be additional buckets, or degrees of impact within each bucket.

The Panda patent mentioned scoring working based on "a group of resources."

The initial version of Panda's worked at a subdomain level. When HubPages highlighted anti-trust related concerns over the change, Matt Cutts gave them the advice to have their individual authors publish content on their own subdomains, which made it easier for Google to create author-specific ratings. Some spammers took advantage of the subdomain-related aspect by rotating subdomains each time Google did a Panda update, though Google eventually closed that hole. Google later adjusted Panda to allow more granular operation down to tighter levels beyond subdomains, like at a folder level.

At some points in time Google has mentioned Panda was being folded into their main algorithm, that it was automatically updated, that it was updated in real time, etc. But they later walked back some of that messaging and referenced that the computationally expensive process had to be manually run. It is run offline & is not frequently updated, much to the chagrin of SEOs.

And as people have become more aware of some of the types of signals used by Panda, Google has resorted to updating Panda less frequently, in order to make manipulating the signals more expensive & to add friction to client communications for those selling recovery services. To add further friction, Google frequently promises "update coming soon" and then falls back on "technical issues."

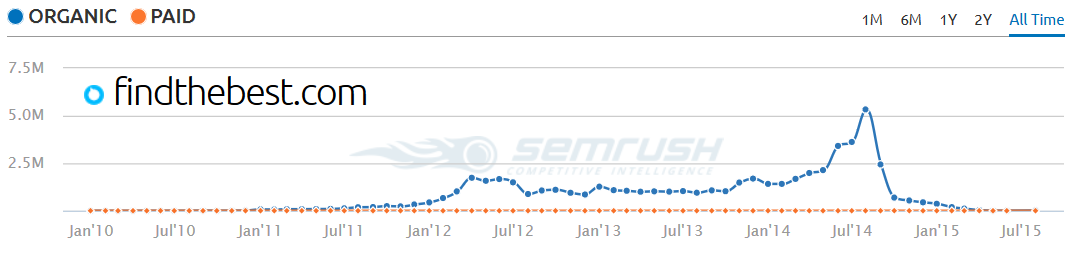

The Golden Ratio: Brand Searches vs Total Search Traffic

The concept of comparing ratios of signals from the original Panda patent also appeared in some follow up patents by Google's Navneet Panda.

Once again, Bill Slawski was one of the first SEOs to analyze another Navneet Panda patent named Site Quality Score.

Methods, systems, and apparatus, including computer programs encoded on computer storage media, for determining a first count of unique queries, received by a search engine, that are categorized as referring to a particular site; determining a second count of unique queries, received by the search engine, that are associated with the particular site, wherein a query is associated with the particular site when the query is followed by a user selection of a search result that (a) was presented, by the search engine, in response to the query and (b) identifies a resource in the particular site; and determining, based on the first and second counts, a site quality score for the particular site.

The primary objective of this patent is to once again leverage navigational or brand-related reference queries as a relevancy signal, but this time it is done by comparing the ratio of a.) unique brand-related search keywords to total keyword count associated with a site; and b.) search traffic from those branded queries to overall search traffic into a site.

What this sort of patent does is subsidize any site which has a well known brand, while punishing sites which try to build a broad-base of longtail search traffic without investing in brand building.

- Losing: Sites which aim to offer a quick answer without making a strong impression (or sites which try to trick people into clicking on ads while serving no other purpose) lose. Sites offering nothing unique lose. Sites which are generic and undifferentiated lose. Sites with a poor user experience lose.

- Winning: Sites which have broad consumer awareness win. Sites which have deep immersive user experiences which make people want to register accounts on them and go back to them frequently win. This sort of signal once again benefits sites like Amazon.com.

If you manage to rank well, but almost nobody repeatedly visits your site or actively seeks you out, then you get hit. If you have broad awareness from other channels or the people who find your site via search keep coming back to it, then you win.

Signal Bleed

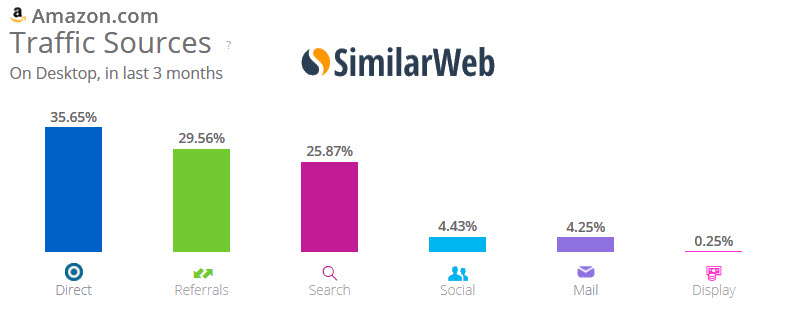

It makes sense that search usage is one of Google's cleanest signals to use, since they own the user experience & can directly track when things fail. The above patent mentions (brand-related & navigational) reference queries as a signal. But there is no reason Google couldn't look at other data sources for related signals. A Google engineer mentioned Google looks at app usage data for recommending apps. Similarly, Google could use the Chrome address bar to augment some of the brand-related search query data.

Do more people visit your site directly rather than via search? Seeing a bunch of logged in Chrome users repeatedly visit your site is a sign of quality, so Google can lean into that direct traffic stream as a signal to subsidize allowing your site to rank better.

Estimating New Sites

The above sorts of ratios are easy to use on existing well-established sites. But they don't work well on a brand new site which has not yet earned a strong rank & does not yet have many inbound links.

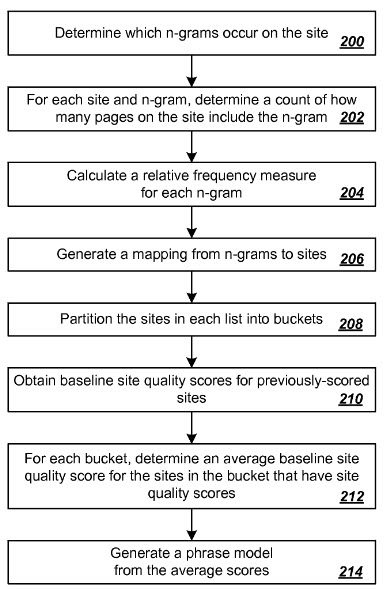

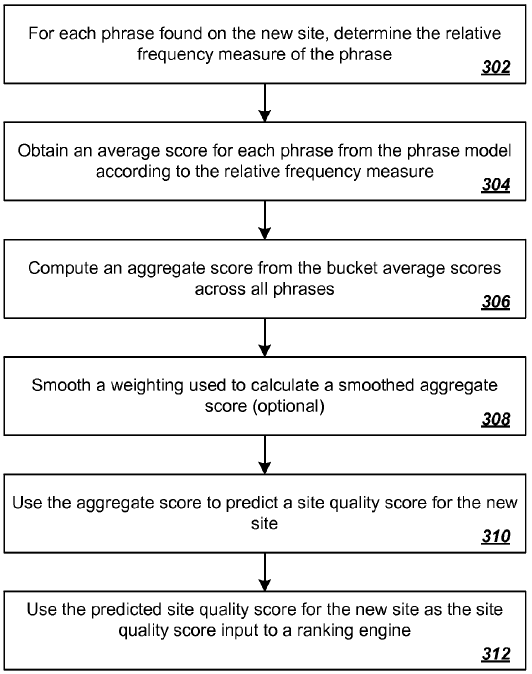

Bill Slawski mentioned another Navneet Panda patent on Predicting Site Quality.

In some implementations, the methods [for predicting a quality score] include obtaining baseline site quality scores for multiple previously scored sites;

- Generating a phrase model for multiple sites including the previously scored sites, wherein the phrase model defines a mapping from phrase specific relative frequency measures to phrase specific baseline site quality scores;

- For a new site that is not one of the previously scored sites, obtaining a relative frequency measure for each of a plurality of phrases in the new site;

- Determining an aggregate site quality score for the new site from the phrase model using the relative frequency measures of phrases in the new site; and

Determining a predicted site quality score for the new site from the aggregate site quality score.

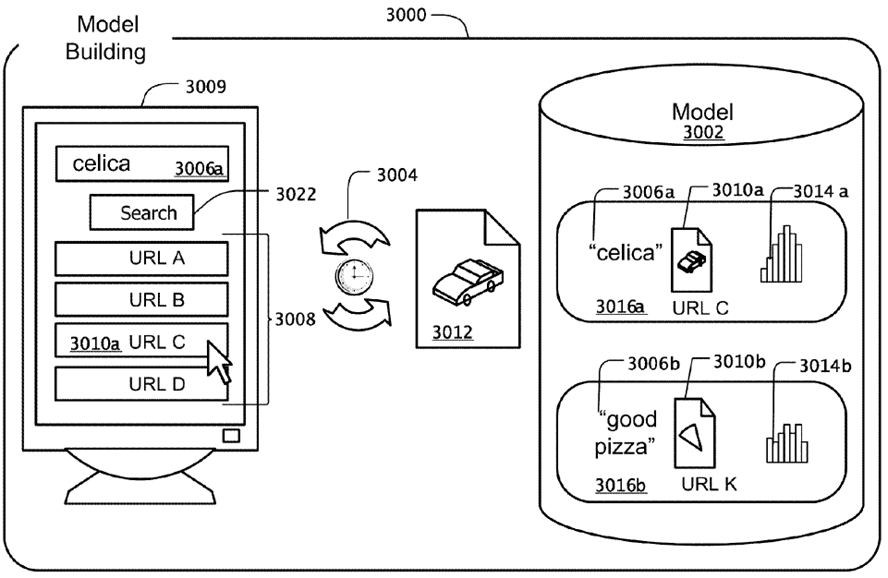

Here are a couple pictures from that patent.

Google views most new sites with suspicion: guilty until proven innocent. If another site doesn't have any pulse of consumer demand and awareness, then if it primarily recycles content already available elsewhere online, Google probably doesn't want the additional duplication in their search ecosystem.

A new site which has an n-gram footprint similar to other sites which were deemed to be of lower quality may get hit due to on-page similarities. Think of...

- using the same exact product feed to auto-generate thousands of pages similar to thousands of other low quality duplicative affiliate sites which don't add any value, or

- a new & unknown merchant who sets up a new shop using default product descriptions while adding nothing else to the page (to Google an empty store full of recycled content is every bit as bad as a run of the mill affiliate & they've even hit some ecommerce networks of sites with doorway page penalties), or

- scraping an RSS feed which is scraped by thousands of other scraper sites, or

- recycling a bunch of crappy press releases, or

- using free content from article databases Google has already torched, or

- creating a site built on private label rights (PLR) content

- other forms of low-quality duplicate content

All Google would need to do to make such a system work is whitelist a few source exemptions (and such whitelists could be algorithmically generated based on having minimum trust-signal thresholds) & then other players syndicating Wikipedia, DMOZ, Amazon.com affiliate listings, and other affiliate feeds and such could easily automatically smoke themselves.

Many other sites using the same (cheap, easy, fast, scalable) content sources & content will have already been penalized by Panda, algorithmic link-based penalties, or manual penalties. Their site's lack of consumer awareness & engagement (it is hard to differentiate an offering when it is a copy of a widely freely available piece of content) combined with a similar footprint makes it easy for Google to choose not to trust parallel newer sites unless they manage to vastly differentiate their profiles from the untrusted sites.

In the past Google has mentioned they moved some aspects of their duplicate detection process earlier in the crawl cycle. In addition to using the above n-gram data for determining estimated quality, they can use that same sort of data for things like flagging sites for manual reviews, and other algorithmic processes like duplicate detection & determining which canonical source they want to rank.

Before Panda launched, sometimes I would let "meh" content get indexed while it was still staging, figuring it would take a bit of time to rank & I'd get around to fixing/formatting/improving it before it did. However over the past few years I've tried to be much more cautious with what I let in the index & try to ensure it is ready to go from day one. A lot of writing ends up being quite keyword dense due to many freelance writers being trained as that being the best way to do things from SEO experience over the years. If you work with a few writers frequently you can ensure that isn't a problem, but anyone ordering loads of content is likely going to get a lot of keyword dense repetition.

If you have enough trust built up (high-quality links, repeat visitors, branded searches, etc.) you can be an outlier many ways over in many areas & still rank fine. But if you don't have those signals built up (and backed up by years of high ratings from Google's remote raters), then the more your profile is aligned with other sites Google is demoting the more likely you are to step into an automatic demotion.

Aging a New Site: The Golden Ratio, Part 2

Prior to panda the idea of age-based trust and building a site to start the aging process was quite wise. But with panda & penguin active, you have to think about ratios with everything you do.

If you have a small enough footprint, sure start the aging process. But the more aggressively you scale things, the more aggressively you must scale awareness building in conjunction with link building and content building.

- ratio of quality links to low quality links

- ratio of branded anchors to keyword rich anchors

- recent rate of link acquisition compared to past link acquisition

- ratio of externally supported pages to unsupported pages

- ratio of links to branded or navigational searches

- ratio of total search visits to branded or navigational searches

- ratio of traffic from search vs traffic from other channels

- ratio of new visits to repeat visits

- ratio of your CTR when your site ranks compared to other sites which rank at that same position for that keyword

- ratio of higher quality keywords you rank for versus lower quality keywords (and the quality of other sites with a similar keyword ranking skew/footprint)

- the ratio of long click visitors on your site (search visitors who stay on your page for an extended period of time without clicking back to Google) versus short click visitors (those who quickly go back to Google)

- ratio of search visitors to your site to the search visitors to your site that click back to Google and then click onto another listing

- ratio of unique content to duplicated content (and the average quality of the majority of other sites which have published that same duplicated content)

- ratio of keyword density within the content (and the average quality of the majority of other documents with a similar keyword density)

- ratio of the main keyword in your content versus supporting related concepts (and the average quality of the majority of other documents with a similar footprint)

- how well your supporting phrases are aligned with those in other top ranked documents

- freshness of your document & the frequency your document is updated (when compared against other top ranking documents for the same keywords)

- etc.

Some shortcuts in isolation may work to boost a site's exposure, but a site which takes many shortcuts in parallel is likely to stand out as a statistical outlier & get classified as being associated with other sites which have already been demoted.

The Trap

By 2010 (even before Panda rolled out) it became clear Google was going to lean hard into setting traps & tricking SEOs into suffocating from negative feedback spirals.

So long as a site's owner is focused exclusively on the organic search channel (& is not focused on building awareness via other channels) then the penalties can almost become self-reinforcing. Webmasters chasing their own tails are not focused on making progress in the broader market. Until the webmaster finds a way to create demand and awareness which then feeds through as branded search queries & user habits, it can be hard to recover.

Many people who are hit by Panda quickly rush off and start disavowing links, but those efforts are removing relevancy signals & if they get rid of good links they are only further lowering their awareness in the marketplace (rather than focusing on building awareness). The only sustainable long-lasting solution is creating demand and awareness. And if your organic search performance has a dampener on it, that means you need to (at least temporarily) focus awareness-building marketing strategies on other channels.

There might be a variety of on-site clean up issues which need to happen in terms of duplication, usability, and so on. But for many sites to recover from a Panda hit you also have to build awareness elsewhere.

General Usage Data Usage

Usage Statistics

Google has a patent named Methods and apparatus for employing usage statistics in document retrieval which is somewhat related to the concepts behind DirectHit. It mentions folding in general web usage data into the relevancy algorithms. The abstract reads:

Methods and apparatus consistent with the invention provide improved organization of documents responsive to a search query. In one embodiment, a search query is received and a list of responsive documents is identified. The responsive documents are organized based in whole or in part on usage statistics.

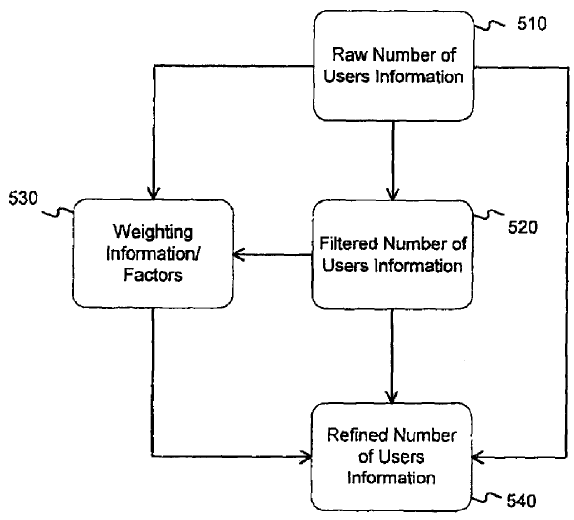

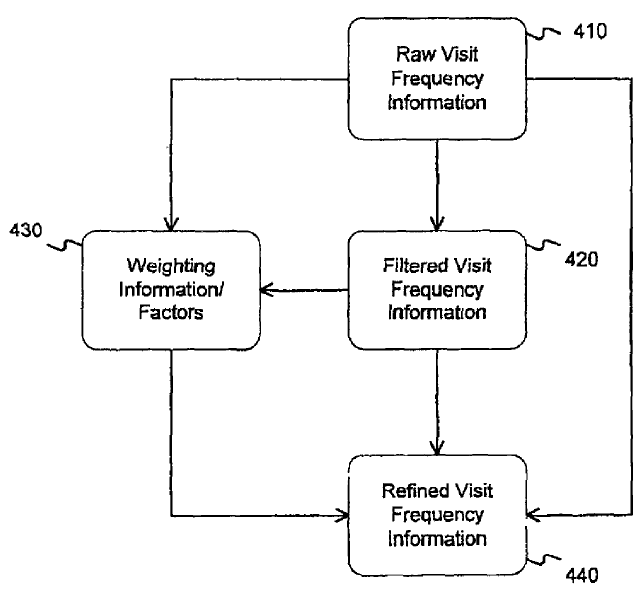

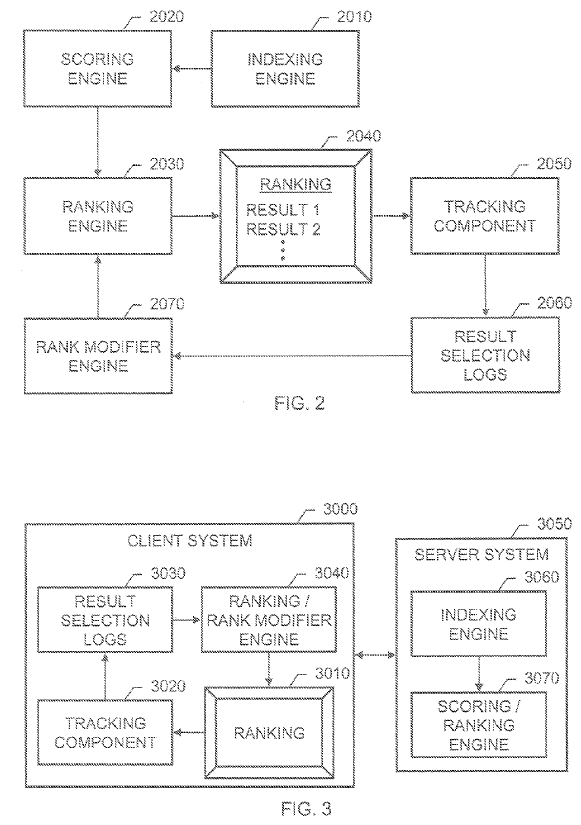

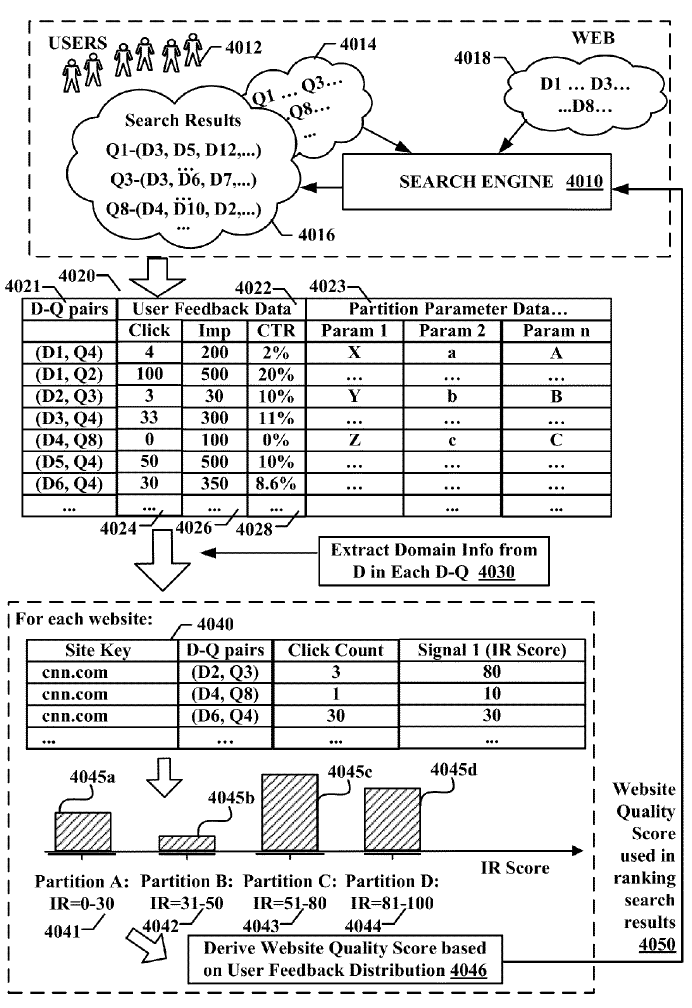

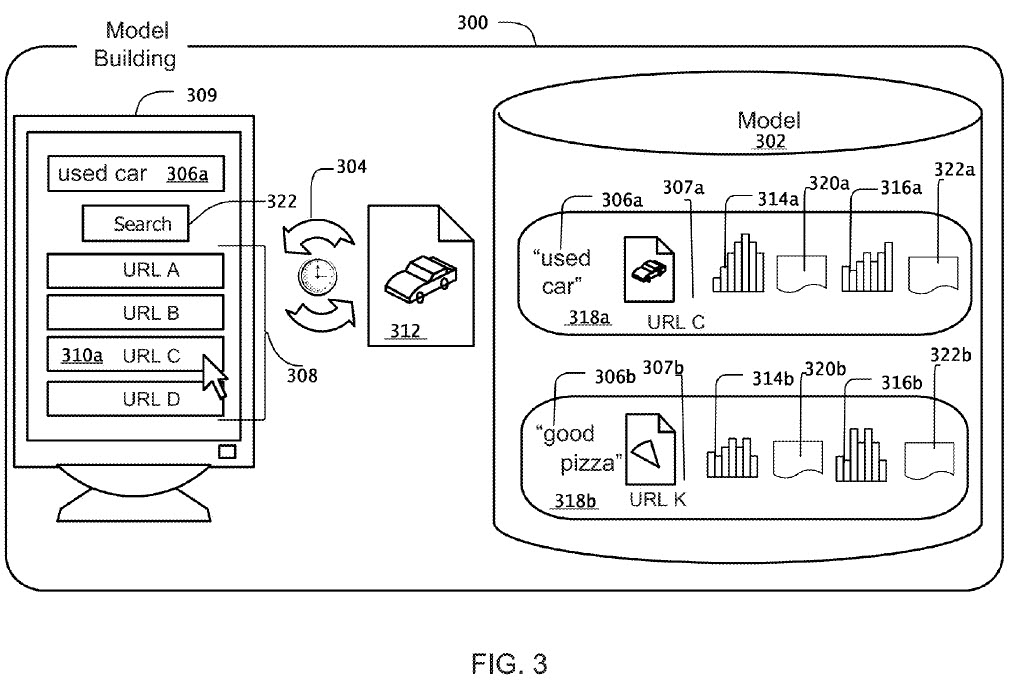

Here are a couple images from the patent which show the rough concepts:

- the number of visitors to a document (based in-part on cookies and/or IP address)

- the frequency of visits to a document

- methods of filtering out automated traffic & traffic from the document owner/maintainer

- weighing the visit data differently across different geographic regions to localize the data to the user (and perhaps weighing other data sets differently to count data more from browsers or user account types which they have more data on and a better trust in the signal quality)

Rather than looking at the data on a per-document basis they can aggregate the information across a site, so that repeat visits to a home page of a news site or some other interactive site could in turn help subsidize the rankings of internal pages on that same site. Here is a description of one implementation type:

In one implementation, documents are organized based on a total score that represents the product of a usage score and a standard query-term-based score (“IR score”). In particular, the total score equals the square root of the IR score multiplied by the usage score. The usage score, in turn, equals a frequency of visit score multiplied by a unique user score multiplied by a path length score.